As technology advances, process automation powered by Artificial Intelligence development and Machine Learning development has become the backbone of various industries, promising efficiency, speed, and cost-effectiveness. At the same time, automation bias has become a significant concern: as businesses and organizations continue to adopt process automation, many are unaware of the consequences these automated systems may have on fairness and equality.

Though automation brings efficiency and convenience, it can also introduce subtle yet powerful biases that perpetuate discrimination and inequality. Understanding the dynamics of automation bias is crucial in addressing these issues and creating systems that promote fairness for all.

What is Automation Bias?

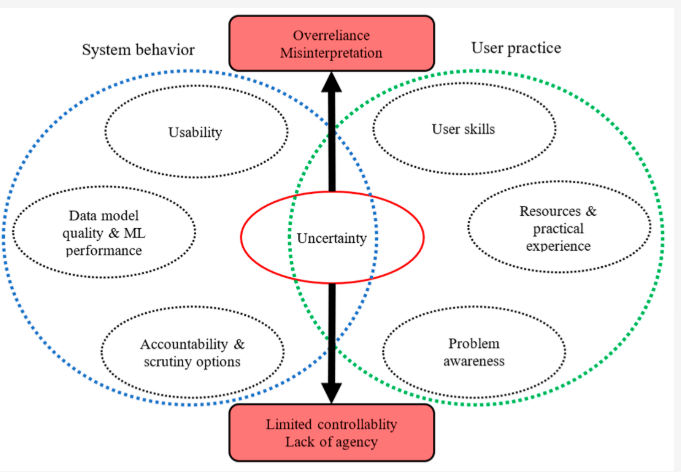

Automation bias refers to the tendency of humans to over-rely on automated systems, assuming their decisions are correct without questioning them. In a world where machines are often seen as infallible, this trust can lead to harmful consequences.

In practice, automation bias means that humans may ignore their own judgments and rely on decisions made by an automated system, even when those systems are flawed or biased. For example, in hiring processes, many companies are increasingly relying on automated systems to screen resumes or evaluate candidates. These systems, while efficient, can perpetuate biases if they are trained on biased data.

Source: MDPI on reporting Advanced ML and Data Mining

As a result, applicants from underrepresented groups may be unfairly screened out, even though their qualifications are equal to or superior to others. This is a clear example of how automation bias can directly contribute to inequality.

How Process Automation Can Amplify Discrimination

While process automation can streamline operations and improve efficiency, it can also amplify algorithmic bias if not carefully managed. Algorithmic bias refers to the systematic favoritism or discrimination inherent in automated systems. If the data used to train these algorithms contains biases — such as gender, race, or socio-economic biases — the automated system will likely inherit and even amplify these biases.

The implications of automation bias are far-reaching and can have serious consequences for individuals and society as a whole. It can lead to:

- Reduced opportunities: Biased algorithms can limit access to employment, education, and financial services for certain groups.

- Reinforcement of stereotypes: Automated systems that perpetuate stereotypes can further marginalize already disadvantaged communities.

- Erosion of trust: As people become aware of the potential for bias in automated systems, it can lead to a loss of trust in institutions and technology.

Consider how AI and inequality intersect in real-world scenarios. In the criminal justice system, predictive algorithms are used to assess the likelihood of reoffending. However, if the data these algorithms rely on reflects historical biases, such as disproportionately higher arrest rates in certain communities, the resulting AI bias can lead to unfair outcomes, such as longer sentences for minorities. This is a stark example of how automation bias can perpetuate societal inequalities.

Similarly, in healthcare, process automation can have unintended consequences. If an algorithm is trained on data that over-represents certain demographics while under-representing others, the system may make inaccurate predictions or recommendations. This could lead to discrepancies in medical care, with marginalized groups receiving inferior treatment or not being diagnosed in a timely manner.

Some known cases of AI algorithm biases

It’s true that relying solely on AI can sometimes lead to unfair outcomes. A report published by The Greenlining Institute documents several examples of algorithms producing biased results in different situations:

Government Programs:

- Michigan’s Unemployment System: Michigan used an algorithm to detect fraud in unemployment claims. Unfortunately, the system wrongly accused thousands of people, leading to fines, bankruptcies, and even foreclosures. It turned out the algorithm often made mistakes and there was no one to double-check its decisions.

- Arkansas Medicaid: An algorithm was used to determine who qualified for Medicaid benefits in Arkansas. This resulted in many people losing access to essential medical care. The problem? The algorithm had errors, and it was very difficult for people to appeal its decisions.

Employment:

- Amazon’s Hiring Tool: Amazon tried to create an algorithm to help them hire the best employees. However, the algorithm ended up favoring men over women. It seems the algorithm learned from past hiring data, which may have contained biases.

Healthcare:

- Unequal Treatment: Even when algorithms are designed to be fair, they can be used in ways that create bias. For example, a hospital used an algorithm to decide which patients needed extra care. Unfortunately, the way they used it meant that Black patients had to be much sicker than White patients to receive the same level of attention.

Education:

- Grading Bias in the UK: During the pandemic, schools in the UK used an algorithm to give students grades when exams were canceled. However, the algorithm ended up giving lower grades to students from lower-income families, showing how algorithms can sometimes reinforce existing inequalities.

Housing:

- Unfair Screening: Many landlords use algorithms to screen potential tenants. One such algorithm unfairly prevented a mother from renting an apartment because her son had a minor offense on his record that was later dismissed.

- Disinvestment in Detroit: An algorithm was used in Detroit to decide which neighborhoods should receive investments and improvements. This resulted in predominantly Black and poor neighborhoods being denied resources, showing how algorithms can worsen existing social problems.

These examples show that while algorithms can be powerful tools, they need to be carefully designed and used responsibly to avoid unfairness and discrimination. We need to ensure that algorithms are used to promote fairness and equality, not perpetuate existing biases.

Tackling Automation Bias and Algorithmic Bias

Addressing automation bias and its potential to perpetuate algorithmic bias requires a multi-faceted approach. First, it’s essential to ensure that the data used to train algorithms is diverse and representative of all demographic groups. Without diverse data, algorithms risk reinforcing existing biases rather than addressing them.
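As a rough illustration of what checking for representative data can look like, the sketch below compares each group's share of a training set against a reference share. Everything in it is hypothetical: the function name `representation_gaps`, the `group` field, the reference shares, and the 5-point tolerance are assumptions made for the example, not a standard or a recommended threshold.

```python
from collections import Counter


def representation_gaps(records, group_key, reference_shares, tolerance=0.05):
    """Return groups whose share of `records` falls short of a reference share.

    Hypothetical helper: `reference_shares` maps group -> expected share
    (for example, from census data), and `tolerance` is an arbitrary cutoff.
    """
    counts = Counter(record[group_key] for record in records)
    total = sum(counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        # Flag only under-representation beyond the tolerance.
        if expected - observed > tolerance:
            gaps[group] = {"observed": round(observed, 3), "expected": expected}
    return gaps


# Made-up training rows: group A dominates the data set.
training_rows = (
    [{"group": "A"}] * 80 + [{"group": "B"}] * 15 + [{"group": "C"}] * 5
)
reference = {"A": 0.60, "B": 0.25, "C": 0.15}

gaps = representation_gaps(training_rows, "group", reference)
print(gaps)  # groups B and C are flagged as under-represented
```

A check like this only shows who is missing from the data. It does not show whether the labels attached to each group are themselves biased, which needs a separate review.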

Second, transparency and accountability must be built into AI and automation systems. Developers should be clear about how these systems function and be open to scrutiny. Regular audits of algorithms can help identify areas where bias may be present, and corrective measures can be implemented before these biases have harmful effects.
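One simple form such an audit can take is comparing how often an automated system selects people from each group. The sketch below is a minimal example under stated assumptions: the decision log is made up, the function names are hypothetical, and the 0.8 cutoff is borrowed from the "four-fifths" rule of thumb used in US employment-selection guidance, where it is a screening heuristic rather than proof of bias.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Compute the selection rate per group from (group, selected) pairs."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Divide the lowest group selection rate by the highest."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Made-up audit log: 50 of 100 selected in group_a, 30 of 100 in group_b.
audit_log = (
    [("group_a", True)] * 50 + [("group_a", False)] * 50
    + [("group_b", True)] * 30 + [("group_b", False)] * 70
)

rates = selection_rates(audit_log)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < 0.8  # illustrative threshold, not a legal test

print(rates, ratio, needs_review)
```

A low ratio is a signal to send the system's decisions to a human reviewer, which also counters automation bias by making sure someone questions the output instead of accepting it by default.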

Finally, the inequality that AI can introduce can be minimized by promoting fairness and inclusivity throughout the design and deployment phases. By integrating ethical AI practices, companies can ensure that their process automation solutions are not only efficient but also just and equitable.

The Role of WeblineIndia in Addressing Automation Bias

For businesses looking to harness the power of process automation while ensuring fairness, transparency, and ethical responsibility, WeblineIndia stands out as a trusted partner with its RelyShore Model of outsourcing. As experts in integrating cutting-edge technology with ethical considerations, WeblineIndia excels in addressing the challenges of automation bias. They are committed to creating AI-driven solutions that minimize algorithmic bias and raise awareness of how AI can contribute to inequality.

WeblineIndia’s approach is unique in that it balances the benefits of automation with a focus on algorithm ethics, ensuring that the technology is used responsibly and does not perpetuate discrimination. Whether it’s developing customized software, implementing process automation solutions, or advising on AI bias mitigation strategies, WeblineIndia’s team prioritizes quality, fairness, and social responsibility.

Choose WeblineIndia and you can rest assured that you are partnering with a service provider who not only understands the technical intricacies of AI but also prioritizes ethical considerations. Together, we can build a future where technology works for everyone, not against them.

In conclusion, while process automation offers immense potential, it’s crucial to be aware of the risks posed by automation bias. By understanding how algorithmic bias works and taking steps to address it, we can ensure that AI and automation systems are tools for good, promoting fairness, inclusivity, and equality in all areas of life. Contact us, and WeblineIndia will help you navigate this challenge and create solutions that uphold the highest standards of ethical responsibility.