Push notifications are everywhere—whether it’s a breaking news alert, a weather update, or a “Your order has shipped” message, they drive engagement and deliver real-time value to users. But designing a push notification system that can handle billions of messages across platforms, while staying performant and reliable, is no walk in the park.

Modern applications often need to push time-sensitive information to users on mobile devices (iOS/Android), desktops (macOS/Windows), and browsers (Chrome, Firefox, Safari). Each of these platforms comes with its own protocol, delivery constraints, and rate limits. Now layer in business rules like user preferences, segmentation, throttling, retries, and localization—you’re suddenly staring at a non-trivial distributed system.

A basic implementation might work at low scale or in a proof-of-concept. But what happens when you’ve got 50 million users, each with multiple devices, and marketing wants to run campaigns to 10% of your audience every few minutes? That’s where things start to fall apart if your architecture wasn’t built for it from the ground up.

This guide walks through the architectural blueprint of building a scalable, fault-tolerant, and extensible push notification system. It’ll focus on cross-platform delivery (APNs, FCM, WebPush), system-level concerns like queuing and retries, as well as how to tune for performance under high load.

The goal is to equip you with the mental model and design patterns needed to build a system that doesn’t buckle under pressure—and can evolve with your product needs.

System Requirements

Functional Requirements

At its core, the push notification system must deliver messages to end-user devices. But “delivery” isn’t enough. Here’s what the system should actually do:

- Multi-platform delivery: Support Apple Push Notification service (APNs), Firebase Cloud Messaging (FCM), and the Web Push protocol (RFC 8030) for browsers.

- Device registration & management: Store device tokens mapped to users, platforms, and applications.

- Targeting capabilities: Send messages to specific users, device groups, user segments, or all users (broadcast).

- Scheduling: Allow messages to be sent immediately or at a future scheduled time.

- Rate limiting: Throttle messages based on platform constraints or internal business rules.

- Retry logic: Automatically retry failed deliveries with exponential backoff or custom retry policies.

- User preferences: Respect opt-in/opt-out status, quiet hours, or specific channel types (e.g., promotional vs. transactional).

- Auditing & analytics: Track delivery success/failure, opens (when possible), and diagnostic metadata for troubleshooting.

Non-Functional Requirements

These are the backbone concerns—miss these, and your platform will collapse under real-world usage.

- Scalability: System must handle spikes of millions of messages per minute.

- Availability: Targeting 99.99% uptime, especially for critical paths like device registration and delivery pipelines.

- Latency: Sub-second processing for transactional (e.g., OTP, security alerts); tolerable delay (5-15s) for batch marketing messages.

- Durability: Messages must not be lost in the event of node or service failure.

- Security: End-to-end protection of user data, authentication to 3rd-party services, encryption of payloads in transit and at rest.

- Multi-tenancy: Ability to support multiple applications/customers with isolated data and delivery pipelines.

Constraints & Assumptions

Defining boundaries is critical to reduce architectural ambiguity.

- Assume platform push providers (APNs, FCM, WebPush) are external and act as black boxes with published SLAs.

- Message payloads are small (2KB–4KB), with most logic handled on the client side.

- There’s an existing user identity platform that exposes device-to-user mappings and user preferences.

- The system won’t guarantee end-to-end delivery: once a message is handed off to APNs, FCM, or a browser’s push service, delivery is the provider’s responsibility.

- System will run in a cloud-native environment (Kubernetes or managed containers, autoscaling, etc.).

Core Use Case and Delivery Landscape

Before diving into architecture, it’s important to clarify what this system is solving for—because “push notifications” mean different things in different business contexts. This section explores the real-world operational landscape, who uses the system, and how the scale impacts design choices.

Primary Business Use Case

Let’s define a typical, high-impact use case: a large-scale B2C e-commerce platform wants to send real-time push notifications across mobile and web to drive user engagement, alert users of order status updates, or trigger behavior-based reminders (e.g., abandoned cart nudges). There are also internal use cases—ops alerts, fraud systems, and partner integrations. But the core driver is high-throughput, user-centric messaging with varying criticality levels.

Users and Notification Channels

The end users of the system are not just customers receiving the push messages. There are multiple actors:

- Marketers: Run large campaigns, schedule and segment user cohorts.

- Product teams: Trigger contextual notifications from app behavior (in-app milestones, gamification loops).

- Backend systems: Automatically trigger transactional messages like OTPs, payment confirmations, or delivery alerts.

On the receiving end, each user can have multiple device types:

- Mobile apps: iOS via APNs and Android via FCM.

- Web browsers: Via Web Push Protocol (VAPID-based), typically for desktop/laptop users.

- Third-party services: In some cases, integrations with third-party delivery channels like WhatsApp or in-app messaging overlays are involved—but out of scope for this system.

Volume and Scale Considerations

Here’s what the system is expected to handle in real-world scale:

- 100M+ registered devices across platforms, with 5–10% active on a daily basis.

- Transactional events: 3–5M messages/day, with hard latency SLAs (e.g., OTP within 3 seconds).

- Campaigns: Burst sends to 10–20 million users in under 5 minutes, often triggered via dashboard or backend job queues.

- Peak throughput: 200K+ messages/minute during Black Friday-type events or viral spikes.
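Converting the campaign target into a send rate is a useful sanity check on these figures. This sketch assumes one message per targeted user (multiply by devices per user if every device gets its own push). On that assumption, 10–20M users in 5 minutes works out to roughly 2–4M messages per minute, or about 33K–67K per second, which is well above the 200K/minute peak figure; capacity planning should follow whichever number is larger.

```python
def required_rate_per_second(recipients: int, window_seconds: int,
                             devices_per_recipient: float = 1.0) -> float:
    """Sustained send rate needed to reach every recipient's devices inside the window."""
    if window_seconds <= 0:
        raise ValueError("window_seconds must be positive")
    return recipients * devices_per_recipient / window_seconds


if __name__ == "__main__":
    # Campaign target from the list above: 10-20M users within 5 minutes.
    for users in (10_000_000, 20_000_000):
        per_sec = required_rate_per_second(users, window_seconds=5 * 60)
        print(f"{users:,} users in 5 min -> {per_sec:,.0f}/s ({per_sec * 60:,.0f}/min)")
```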

This isn’t about sending one message to a user—it’s about sending millions of tailored messages across thousands of nodes, with reliability, retries, and minimal delay. The design must accommodate this kind of dynamic workload, which means stateless core services, distributed queues, and horizontal scalability at every tier.

High-Level System Architecture

The architecture for a scalable push notification system revolves around three principles: decoupling, parallelism, and platform-specific delivery. At scale, monolithic designs fall apart—so this architecture embraces a microservices-based, event-driven approach that can fan out, throttle, retry, and audit billions of messages with minimal bottlenecks.

Let’s walk through the core subsystems, data flow, and platform-specific abstractions that make it all work.

Component Overview

The architecture is divided into the following logical components:

- Ingestion API: Receives message requests (batch or transactional), validates input, and enqueues messages into the pipeline.

- Routing Engine: Looks up target devices, splits each message into per-device jobs, and enqueues each job into the queue for its platform.

- Platform Delivery Workers: Dedicated microservices for APNs, FCM, and WebPush. These handle connection management, retries, batching, and rate-limiting.

- Message Queue (Broker Layer): High-throughput broker (e.g., Kafka, RabbitMQ, or Google Pub/Sub) for decoupling producers from delivery logic.

- Device Registry Service: Stores device metadata, user mappings, token validity, platform type, and opt-in status.

- Preference & Rules Engine: Evaluates user notification settings, suppression rules (quiet hours, frequency caps), or channel-specific overrides.

- Scheduler: For delayed or time-window-based message delivery.

- Monitoring & Feedback Loop: Captures platform-level delivery receipts, errors, and analytics, and processes invalid-token signals (APNs 410 `Unregistered` responses, FCM `UNREGISTERED` errors, expired Web Push subscriptions).
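The Routing Engine's fan-out step can be sketched as follows, assuming device records have already been resolved (for example, from the Redis token cache) and that each platform has its own queue. The `Device` type and the queue names `push.apns`, `push.fcm`, and `push.webpush` are hypothetical. Splitting per platform is what lets each worker pool scale and fail independently.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical per-platform queue/topic names.
QUEUE_BY_PLATFORM = {"ios": "push.apns", "android": "push.fcm", "web": "push.webpush"}


@dataclass(frozen=True)
class Device:
    device_id: str
    platform: str  # 'ios' | 'android' | 'web'
    token: str
    is_active: bool = True


def route(message_id: str, devices: list[Device]) -> dict[str, list[dict]]:
    """Split one logical message into per-device jobs, grouped by destination queue."""
    jobs: dict[str, list[dict]] = defaultdict(list)
    for device in devices:
        if not device.is_active:
            continue  # skip tokens already marked invalid
        queue = QUEUE_BY_PLATFORM.get(device.platform)
        if queue is None:
            continue  # unknown platform: drop here rather than poison a worker queue
        jobs[queue].append({
            "message_id": message_id,
            "device_id": device.device_id,
            "token": device.token,
            # Per-device idempotency key so retries can be de-duplicated downstream.
            "delivery_id": f"{message_id}:{device.device_id}",
        })
    return dict(jobs)
```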

High-Level Architecture Diagram

```
                       +---------------------+
                       |    Ingestion API    |
                       +----------+----------+
                                  |
                                  v
                       +---------------------+
                       |   Routing Engine    |
                       +----------+----------+
                                  |
                                  v
                       +---------------------+
                       |   Message Broker    |
                       | (Kafka / RabbitMQ)  |
                       +----------+----------+
                                  |
         +------------------------+---------------------------+
         |                        |                           |
         v                        v                           v
  +-------------+          +-------------+           +-----------------+
  | APNs Worker |          |  FCM Worker |           | WebPush Worker  |
  +------+------+          +------+------+           +--------+--------+
         |                        |                           |
         v                        v                           v
+------------------+   +---------------------+   +-------------------------+
| Apple Push Notif |   | Firebase Cloud Msg  |   | Web Push Service (VAPID)|
| Service (APNs)   |   | (FCM)               |   |                         |
+------------------+   +---------------------+   +-------------------------+

+------------------------+          +--------------------------+
|  Device Registry Svc   | <------> | User Preferences Engine  |
+------------------------+          +--------------------------+

+---------------------------+
| Scheduler / Campaign Svc  |
+---------------------------+

+---------------------------+
|  Monitoring / Analytics   |
| (Logs, Receipts, Metrics) |
+---------------------------+
```

Key Architectural Patterns

- Event-driven architecture: Message flow is fully asynchronous. Producers (APIs, internal systems) are decoupled from delivery using pub/sub or messaging queues.

- Platform-specific abstraction: Workers are tailored per platform. Each manages its own rate limits, payload formats, retries, and API auth.

- Backpressure support: Message brokers must handle surges without dropping data. Buffering, dead-letter queues, and consumer autoscaling are built in.

- Idempotency & deduplication: Messages must carry unique IDs to avoid double-send issues, especially on retries.

- Failure isolation: A platform outage (say, APNs) shouldn’t cascade and stall messages for FCM/WebPush. Each worker pool is isolated and fault-tolerant.
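Idempotency can be sketched as a "first claim wins" check on a per-delivery ID before calling the provider. This in-memory version with expiry stands in for what would typically be a shared store (for example, Redis `SET <id> 1 NX EX <ttl>`); the class name and the TTL are assumptions.

```python
import time
from typing import Callable


class DeliveryDeduplicator:
    """Remembers delivery IDs for ttl_seconds; a second claim inside that window is rejected.

    In-memory stand-in for a shared store such as Redis `SET <id> 1 NX EX <ttl>`.
    Expired entries are simply overwritten on reuse; a real store would evict them.
    """

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._seen: dict[str, float] = {}  # delivery_id -> expiry time

    def claim(self, delivery_id: str) -> bool:
        """Return True if the caller should send; False if this ID was already claimed."""
        now = self._clock()
        expiry = self._seen.get(delivery_id)
        if expiry is not None and expiry > now:
            return False
        self._seen[delivery_id] = now + self._ttl
        return True
```

A worker would call `claim(job["delivery_id"])` right before sending and skip the send when it returns `False`, which makes broker redeliveries and retries safe to replay.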

This architecture can horizontally scale each component independently—more workers for APNs during iOS campaign bursts, or more routing nodes during ingestion spikes. Each tier can be monitored, throttled, or restarted without impacting the full system.


Database Design & Token Management

Push notification systems live or die by their ability to manage millions of device tokens, user preferences, and message states efficiently. This section breaks down how to design a database schema that scales horizontally, maintains delivery consistency, and supports real-time targeting and filtering.

Core Data Entities

At minimum, the following entities are required:

- Users: Logical user identity (UID), tied to one or many devices.

- Devices: Platform-specific device records with tokens, app version, last seen, etc.

- Preferences: User-specific opt-in/opt-out flags, channel settings, quiet hours, etc.

- Messages: History of sent notifications, their delivery status, and metadata for troubleshooting/auditing.

Entity Relationship Diagram (ERD)

Here’s how the tables in the schema below relate at a high level (1 = one, M = many):

```
+--------+ 1      M +---------+ 1      M +---------------+ M      1 +---------------+
| users  |----------| devices |----------| delivery_logs |----------| notifications |
+--------+          +---------+          +---------------+          +---------------+
    | 1
    |
    | M
+-------------+
| preferences |
+-------------+
```

Table Schemas (Simplified)

```sql
-- PostgreSQL dialect: enum types are declared once and reused as column types.
CREATE TYPE device_platform       AS ENUM ('ios', 'android', 'web');
CREATE TYPE notification_channel  AS ENUM ('transactional', 'marketing');
CREATE TYPE notification_priority AS ENUM ('high', 'normal', 'low');
CREATE TYPE delivery_status       AS ENUM ('pending', 'delivered', 'failed', 'dropped');

CREATE TABLE users (
    id          UUID PRIMARY KEY,
    email       TEXT UNIQUE NOT NULL,      -- UNIQUE already creates the email lookup index
    created_at  TIMESTAMP DEFAULT NOW()
);

CREATE TABLE devices (
    id           UUID PRIMARY KEY,
    user_id      UUID NOT NULL REFERENCES users(id) ON DELETE CASCADE,
    platform     device_platform NOT NULL,
    token        TEXT UNIQUE NOT NULL,     -- UNIQUE already indexes token for de-duping
    app_version  TEXT,
    last_active  TIMESTAMP,
    is_active    BOOLEAN DEFAULT true,
    created_at   TIMESTAMP DEFAULT NOW()
);

-- Fan-out lookups: all devices for a user on a given platform.
CREATE INDEX idx_devices_user_platform ON devices (user_id, platform);

CREATE TABLE preferences (
    id           UUID PRIMARY KEY,
    user_id      UUID NOT NULL REFERENCES users(id) ON DELETE CASCADE,
    channel      notification_channel NOT NULL,
    enabled      BOOLEAN DEFAULT true,
    quiet_hours  JSONB,                    -- e.g. {"start": "22:00", "end": "07:00"}
    updated_at   TIMESTAMP DEFAULT NOW()
);

-- Preference lookup: one row per user and channel.
CREATE UNIQUE INDEX idx_prefs_user_channel ON preferences (user_id, channel);

CREATE TABLE notifications (
    id            UUID PRIMARY KEY,
    title         TEXT,
    body          TEXT,
    payload       JSONB,
    scheduled_at  TIMESTAMP,
    priority      notification_priority DEFAULT 'normal',
    created_by    TEXT,
    created_at    TIMESTAMP DEFAULT NOW()
);

CREATE TABLE delivery_logs (
    id               UUID PRIMARY KEY,
    notification_id  UUID NOT NULL REFERENCES notifications(id) ON DELETE CASCADE,
    device_id        UUID NOT NULL REFERENCES devices(id) ON DELETE CASCADE,
    status           delivery_status DEFAULT 'pending',
    attempt_count    INT DEFAULT 0,
    response_code    TEXT,
    error_message    TEXT,
    delivered_at     TIMESTAMP
);

CREATE INDEX idx_logs_device_status ON delivery_logs (device_id, status);
CREATE INDEX idx_logs_notif_status  ON delivery_logs (notification_id, status);
```

Sample Rows (Illustrative Only)

```
users
 id        | email
-----------+------------------
 5b6e...d1 | user@example.com

devices
 id        | user_id   | platform | token          | is_active
-----------+-----------+----------+----------------+-----------
 f7a9...91 | 5b6e...d1 | ios      | abc123token... | true

preferences
 id        | user_id   | channel   | enabled | quiet_hours
-----------+-----------+-----------+---------+---------------------------------
 c1f3...72 | 5b6e...d1 | marketing | false   | {"start":"22:00","end":"07:00"}

notifications
 id        | title          | priority | scheduled_at
-----------+----------------+----------+---------------------
 e93f...55 | Order Shipped! | high     | 2025-04-22 13:00:00

delivery_logs
 id        | notification_id | device_id | status    | attempt_count
-----------+-----------------+-----------+-----------+---------------
 d95b...11 | e93f...55       | f7a9...91 | delivered | 1
```

Token Management Best Practices

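- De-duplicate tokens: The same token can be registered more than once (for example, when the app re-registers on every launch, or when a different user signs in on the same device). Upsert on the token so each token maps to exactly one active device record.

- Token expiration handling: Mark tokens inactive as soon as a provider reports them invalid (e.g., APNs HTTP 410 `Unregistered`, FCM `UNREGISTERED` errors), and periodically prune tokens that have not been seen for a long time.

- Soft deletes: Use `is_active` flags rather than hard deletes; with `ON DELETE CASCADE` on `delivery_logs`, hard-deleting a device would also erase its delivery history.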

- Index wisely: Devices should be indexed on `(user_id, platform)` and `(token)` for fast lookups and de-duping.

- Avoid user joins at runtime: Keep all targeting data (tokens, opt-ins) in fast-access stores like Redis or pre-joined views for high-speed delivery runs.
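The registration and invalidation rules above can be sketched with an in-memory registry keyed by token. It upserts on the token, so a re-registered token moves to its current owner instead of creating a duplicate, and it soft-deletes tokens that a provider reports as invalid. Treating APNs status 410, FCM's `UNREGISTERED` error, and Web Push 404/410 responses as permanent invalidations follows those providers' documented behavior; the class and method names are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class DeviceRecord:
    user_id: str
    platform: str            # 'ios' | 'android' | 'web'
    token: str
    app_version: Optional[str]
    last_active: datetime
    is_active: bool = True


class TokenRegistry:
    """In-memory stand-in for the devices table, keyed by token (the UNIQUE column)."""

    def __init__(self) -> None:
        self._by_token: dict[str, DeviceRecord] = {}

    def register(self, user_id: str, platform: str, token: str,
                 app_version: Optional[str] = None) -> DeviceRecord:
        """Upsert on token: a repeat registration updates the existing record."""
        now = datetime.now(timezone.utc)
        record = self._by_token.get(token)
        if record is None:
            record = DeviceRecord(user_id, platform, token, app_version, now)
            self._by_token[token] = record
        else:
            # Same token again: re-activate it and assign it to whoever registered it last.
            record.user_id = user_id
            record.platform = platform
            record.app_version = app_version
            record.last_active = now
            record.is_active = True
        return record

    def handle_provider_error(self, token: str, status: int, reason: str = "") -> None:
        """Soft-delete (is_active = False) tokens the provider reports as permanently invalid."""
        record = self._by_token.get(token)
        if record is None:
            return
        invalid = (
            (record.platform == "ios" and status == 410)                     # APNs Unregistered
            or (record.platform == "android" and reason == "UNREGISTERED")  # FCM HTTP v1
            or (record.platform == "web" and status in (404, 410))          # expired subscription
        )
        if invalid:
            record.is_active = False

    def active_tokens(self, user_id: str) -> list[str]:
        return [r.token for r in self._by_token.values() if r.user_id == user_id and r.is_active]
```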

Multi-Tenancy & Isolation Strategies

If supporting multiple apps or clients:

- Tenant column strategy: Add a `tenant_id` column to every table and enforce row-level isolation.

- Logical DB isolation: Use separate schemas or databases per tenant if SLA and data sensitivity require it.

- Write-path separation: Isolate high-priority transactional messages from bulk/marketing queues at the DB layer to avoid noisy neighbor issues.

Partitioning & Scale

At scale, certain tables (like delivery_logs) will grow rapidly. Consider:

- Time-based partitioning: Partition logs monthly or weekly so indexes stay small and old data can be archived or dropped one partition at a time.

- Archival policy: Move older logs to cold storage (e.g., S3 + Athena) for audit access without impacting live performance.

- Token cache: Sync active device tokens into Redis or DynamoDB for high-throughput dispatch loops.
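Time-based partitioning in PostgreSQL could be driven by a small generator like the one below, which emits the DDL for one monthly partition of `delivery_logs`. It assumes the parent table was created with `PARTITION BY RANGE (created_at)` on a `created_at` column, which the simplified schema above does not include; the `delivery_logs_YYYY_MM` naming is also an assumption. A scheduled job would create next month's partition ahead of time.

```python
from datetime import date


def monthly_partition_ddl(year: int, month: int, parent: str = "delivery_logs") -> str:
    """DDL for one monthly range partition covering [first of month, first of next month)."""
    start = date(year, month, 1)
    end = date(year + 1, 1, 1) if month == 12 else date(year, month + 1, 1)
    name = f"{parent}_{year:04d}_{month:02d}"
    # PostgreSQL range bounds: FROM is inclusive, TO is exclusive.
    return (
        f"CREATE TABLE IF NOT EXISTS {name} PARTITION OF {parent} "
        f"FOR VALUES FROM ('{start.isoformat()}') TO ('{end.isoformat()}');"
    )
```

Older partitions can then be detached with `ALTER TABLE ... DETACH PARTITION` and exported to cold storage, which matches the archival policy above without touching live partitions.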

Redis Token Cache Schema

Redis is used as a read-optimized, in-memory token lookup layer. It avoids hitting the primary DB during message dispatch and supports constant-time lookups across massive user/device sets.

Primary Use Cases:

- Rapid fan-out of device tokens per user

- Lookup by user segments (e.g., active iOS devices)

- Token deduplication and expiry control

- TTL enforcement for inactive or rotated tokens

Recommended Key Structure

```
Key:   push:user:<user_id>:tokens
Type:  Redis Set
Value: token:<platform>:<token>
TTL:   Optional (based on last_active)
```

Example:

```
Key:   push:user:5b6ed...d1:tokens
Value: {
  "token:ios:abc123token...",
  "token:web:def456token...",
  "token:android:xyz789token..."
}
```

Alternate Key for Direct Token Lookup

```
Key:    push:token:<platform>:<token>
Type:   Hash
Fields: {
  user_id:     <user_id>,
  app_version: <app_version>,
  last_seen:   <timestamp>,
  is_active:   true/false
}
TTL:    30 days from last_seen
```

Example:

```
Key:   push:token:ios:abc123token...
Value: {
  user_id:     "5b6ed...d1",
  app_version: "6.4.1",
  last_seen:   "2025-04-21T18:11:45Z",
  is_active:   true
}
```

Best Practices

- Bulk load from DB: Cache warm-up jobs should sync active tokens from the relational DB on startup or via CDC (Change Data Capture).

- Invalidate on unregister: When a device is unregistered or token is marked invalid by APNs/FCM, delete the Redis entry.

- Keep TTL lean: Tokens not used in 30–60 days should expire to prevent cache bloat.

- Set-level fanout: Use Redis sets to get all tokens per user and perform message fan-out efficiently in worker processes.

- Multi-tenant support: Prefix all keys with tenant ID if operating in a multi-tenant environment.
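The key layout and TTL rules can live in a few shared helpers so every service builds keys the same way. This sketch assumes the tenant prefix takes the form `<tenant_id>:` ahead of the `push:` namespace and uses the 30-day TTL from `last_seen`; both are illustrative choices. The helpers only compute names and TTLs; with redis-py they would feed calls such as `sadd`, `hset(name, mapping=...)`, and `expire`.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

TOKEN_TTL = timedelta(days=30)  # "30 days from last_seen"


def _prefix(tenant_id: Optional[str]) -> str:
    # Assumed multi-tenant convention: "<tenant_id>:" in front of every key.
    return f"{tenant_id}:" if tenant_id else ""


def user_tokens_key(user_id: str, tenant_id: Optional[str] = None) -> str:
    """Set whose members are 'token:<platform>:<token>' strings for one user."""
    return f"{_prefix(tenant_id)}push:user:{user_id}:tokens"


def token_key(platform: str, token: str, tenant_id: Optional[str] = None) -> str:
    """Hash holding user_id, app_version, last_seen and is_active for one token."""
    return f"{_prefix(tenant_id)}push:token:{platform}:{token}"


def token_member(platform: str, token: str) -> str:
    return f"token:{platform}:{token}"


def ttl_seconds(last_seen: datetime, now: Optional[datetime] = None) -> int:
    """Seconds left before the entry should expire; 0 means it is already stale."""
    now = now or datetime.now(timezone.utc)
    remaining = (last_seen + TOKEN_TTL) - now
    return max(0, int(remaining.total_seconds()))
```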

This cache complements the persistent DB layer by serving as the low-latency lookup tier during the critical send loop. It also helps segment delivery flows (e.g., only send to users with active iOS tokens) without expensive relational joins or full scans.


Detailed Component Design

Each part of the system has its own responsibility—but the way they work together defines whether your push architecture can scale or crumble. In this section, we’ll break down the core components layer by layer: data handling, service logic, delivery workers, and optional UI hooks.

1. Data Layer (Storage, Access, and Caching)

- Relational DB: Stores core entities (users, devices, preferences, notifications, delivery logs).

- Redis: Serves as a high-speed lookup layer for active tokens, recent deliveries, and real-time suppression rules.

- ORM or Data Access Layer: Use a clean abstraction to enforce validation, field defaults, and schema constraints. Avoid writing raw SQL in delivery loops.

- Read replica usage: Route high-volume read queries (e.g., fan-out lookups) to replicas to prevent write contention.

2. Application Layer (Routing, Filtering, Segmentation)

The app layer is the brain of the system. It knows what to send, to whom, when, and under what conditions. This is where business logic lives.

- Ingestion API: Handles incoming requests from internal services (OTP, marketing tools, transactional triggers).

- Message routing service: Decides which users/devices should get which message. Joins message metadata with Redis token sets.

- Preference & rules engine: Enforces opt-in/out, channel limits (e.g., max 2 marketing pings per day), and time windows (e.g., no messages at 2AM).

- Rate controller: Throttles message dispatch per tenant, per platform, per region. Integrates with Redis counters or sliding window algorithms.
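A rough sketch of the preference and rules check, evaluated per user before a message is enqueued. It assumes quiet hours are stored like the `quiet_hours` JSON in the sample rows (start and end as `HH:MM`) and compared against the user's local time, and it applies the "max 2 marketing pings per day" cap to the marketing channel only. Letting transactional messages bypass quiet hours and caps is a policy assumption.

```python
from datetime import datetime, time
from typing import Optional


def _parse_hhmm(value: str) -> time:
    hours, minutes = value.split(":")
    return time(int(hours), int(minutes))


def in_quiet_hours(local_now: datetime, quiet_hours: Optional[dict]) -> bool:
    """True if local_now falls inside the window; handles windows that wrap past midnight."""
    if not quiet_hours:
        return False
    start = _parse_hhmm(quiet_hours["start"])
    end = _parse_hhmm(quiet_hours["end"])
    now = local_now.time()
    if start <= end:
        return start <= now < end
    return now >= start or now < end  # e.g. 22:00-07:00 spans midnight


def should_send(channel: str, enabled: bool, quiet_hours: Optional[dict],
                local_now: datetime, sent_today: int, marketing_daily_cap: int = 2) -> bool:
    if not enabled:
        return False  # user opted out of this channel
    if channel == "transactional":
        return True   # assumption: OTPs and receipts ignore quiet hours and caps
    if in_quiet_hours(local_now, quiet_hours):
        return False
    return sent_today < marketing_daily_cap
```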

3. Integration Layer (Delivery Services & Workers)

Each platform (APNs, FCM, WebPush) has its quirks, so don’t hide them behind a lowest-common-denominator abstraction. Instead, treat each platform as a first-class citizen with a dedicated delivery service, even if all of them implement the same thin `send` interface.

- APNs Worker: Uses token-based authentication via JWT. Handles push over HTTP/2. Manages TCP connection pool and rate limits from Apple.

- FCM Worker: Auth via service accounts. Batches messages per topic or user group. Tracks per-device errors (e.g., unregistered, quota exceeded).

- WebPush Worker: Implements VAPID authentication, encrypts payloads per RFC 8291 (ECDH over P-256 with AES-128-GCM), and sends them directly to each subscription’s endpoint URL.

- Retry queue: Any failed delivery gets re-queued with exponential backoff. DLQs (dead-letter queues) capture persistent failures for inspection.
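The retry path can be sketched as a pure decision function: given the number of attempts so far and the provider's status code, either schedule a retry after an exponential backoff with full jitter, or route the message to the DLQ. The retryable status set (429 and common 5xx codes), base delay, cap, and attempt limit are assumptions to tune per provider; permanent errors such as an invalid token go straight to the DLQ instead of being retried.

```python
import random
from dataclasses import dataclass
from typing import Optional

# Assumption: throttling and transient server errors are worth retrying; everything else is not.
RETRYABLE_STATUSES = frozenset({429, 500, 502, 503, 504})


@dataclass(frozen=True)
class RetryDecision:
    action: str                           # "retry" or "dead_letter"
    delay_seconds: Optional[float] = None


def next_step(attempt: int, status: int, max_attempts: int = 5, base: float = 1.0,
              cap: float = 300.0, rng: Optional[random.Random] = None) -> RetryDecision:
    """Decide what to do after a failed send; `attempt` counts sends made so far (>= 1)."""
    if status not in RETRYABLE_STATUSES or attempt >= max_attempts:
        return RetryDecision("dead_letter")
    # Full jitter: pick uniformly in [0, min(cap, base * 2**attempt)] so retries spread out.
    ceiling = min(cap, base * 2 ** attempt)
    return RetryDecision("retry", (rng or random).uniform(0, ceiling))
```

The worker would publish the message back to a delay or retry queue with `delay_seconds` attached, and increment the attempt count stored on the message (mirroring `attempt_count` in `delivery_logs`).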

4. UI Layer (Optional, Admin-Facing)

Not all systems need this, but for in-house marketing ops or product teams, a lightweight dashboard can be useful.

- Campaign Composer: Create and schedule notification blasts to user segments with WYSIWYG tools.

- Preview & Targeting: Live test on test devices or A/B buckets before a full rollout.

- Activity audit: Real-time dashboard showing what’s in the queue, what’s delivered, and what failed (with reason codes).

Design Tips

- Break each worker into stateless microservices that can scale horizontally.

- Don’t reuse the same service for both delivery and feedback (e.g., APNs receipts). Isolate those flows for resilience.

- Add structured logging and correlation IDs at every layer for traceability.

Scalability Considerations

Push notification systems don’t just need to work—they need to work *fast* and *at scale*, under unpredictable traffic surges. This section outlines the patterns, infrastructure strategies, and safeguards necessary to keep throughput high and latencies low, even when you’re sending millions of messages per minute.

Horizontal Scaling Across Tiers

Each component in the system—ingestion API, router, workers, queues—should be horizontally scalable. This enables independent tuning of bottlenecks without over-provisioning the entire pipeline.

- API Gateways / Ingress: Front APIs should autoscale based on CPU/RPS. Use rate limiting to protect downstream services.

- Stateless workers: Delivery workers and routers must be stateless and containerized. Use Kubernetes HPA (Horizontal Pod Autoscaler) or Fargate autoscaling rules based on queue depth, CPU, or latency.

- Queue depth-based scaling: Track backlog size and spawn more workers when depth crosses threshold (e.g., Kafka lag or RabbitMQ queue length).
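Queue-depth scaling comes down to a small calculation: how many workers are needed to absorb incoming traffic and drain the current backlog within a target time. The sketch assumes a measured per-worker throughput and clamps the result to a min/max replica range; in Kubernetes, the backlog would usually be exposed as an external metric (such as consumer lag) for the autoscaler rather than computed by custom code. All parameter values are illustrative.

```python
import math


def desired_replicas(backlog: int, per_worker_rate: float, drain_target_seconds: float,
                     incoming_rate: float = 0.0, min_replicas: int = 2,
                     max_replicas: int = 200) -> int:
    """Workers needed to keep up with new traffic and clear the backlog in time.

    backlog: messages waiting (e.g. summed Kafka consumer lag or RabbitMQ queue length)
    per_worker_rate, incoming_rate: messages per second
    """
    if per_worker_rate <= 0 or drain_target_seconds <= 0:
        raise ValueError("per_worker_rate and drain_target_seconds must be positive")
    needed_rate = incoming_rate + backlog / drain_target_seconds
    replicas = math.ceil(needed_rate / per_worker_rate)
    return max(min_replicas, min(max_replicas, replicas))
```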

Sharding & Partitioning

- Shard by user_id: Partition delivery workloads by hashed user ID to preserve locality and prevent contention in DB or token cache.

- Kafka partitioning: Use the user or tenant ID as the message key so every notification for that key lands on the same partition (and therefore the same consumer within a group), which also preserves per-key ordering.

- Database partitioning: For large tables like delivery_logs, use time-based partitioning (e.g., monthly) to maintain query speed.
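Hashing a stable key to a fixed number of shards keeps all of a user's work on one shard. This sketch uses CRC32 purely for illustration; Kafka's default partitioner applies its own murmur2 hash to the record key, so with Kafka you would simply set the key to the user or tenant ID and let the producer pick the partition.

```python
import zlib


def shard_for(key: str, num_shards: int) -> int:
    """Map a user or tenant ID to a shard in [0, num_shards) deterministically."""
    if num_shards <= 0:
        raise ValueError("num_shards must be positive")
    # zlib.crc32 is stable across processes, unlike Python's salted built-in hash() for str.
    return zlib.crc32(key.encode("utf-8")) % num_shards
```

Changing `num_shards` remaps most keys, so either pick a generous fixed shard count up front or use consistent hashing if shards will be added over time.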

Fan-out Performance Optimizations

The real bottleneck in high-scale systems is fan-out: mapping one logical message to thousands or millions of device tokens.

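- Redis set union: Use `SUNION` over per-user token sets to gather a cohort’s tokens in one command; for very large cohorts, split the keys into batches so no single call blocks Redis for long.

- Batching API calls: Group messages by platform and send them in batches (e.g., the Firebase Admin SDK’s multicast send accepts up to 500 tokens per call).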

- Token deduplication: Ensure no duplicate tokens go into delivery queues. Maintain uniqueness via Redis or pre-processing step.
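Batching and de-duplication combine naturally: drop repeated tokens while preserving order, then cut the stream into provider-sized batches. The default batch size of 500 follows the FCM figure above; other providers would use their own limits. Because it is a generator, it works on token streams too large to hold as one list.

```python
from typing import Iterable, Iterator


def dedupe_and_batch(tokens: Iterable[str], batch_size: int = 500) -> Iterator[list[str]]:
    """Yield batches of unique tokens (first occurrence wins), each at most batch_size long."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    seen: set[str] = set()
    batch: list[str] = []
    for token in tokens:
        if token in seen:
            continue
        seen.add(token)
        batch.append(token)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch  # final partial batch
```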

Caching Strategy

- Tokens: Cached per user ID. TTL refreshed on delivery or login.

- Preferences: Cache suppression rules (e.g., quiet hours, frequency caps) in Redis or memory-local store.

- Throttling: Redis counters (INCRBY + TTL) or token buckets for tenant-level and global caps.
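Of the throttling options listed, a token bucket is easy to reason about: it allows short bursts up to a capacity while enforcing an average rate. This single-process sketch shows the mechanics; a shared tenant-level cap would keep the same state (tokens and last refill time) in Redis and update it atomically, for example in a Lua script. The rates are placeholders.

```python
import time
from typing import Callable


class TokenBucket:
    def __init__(self, rate_per_sec: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate_per_sec
        self.capacity = capacity
        self._clock = clock
        self._tokens = capacity          # start full so an idle tenant can burst
        self._last = clock()

    def try_acquire(self, n: float = 1.0) -> bool:
        """Take n tokens if available; return False (caller should defer) otherwise."""
        now = self._clock()
        elapsed = now - self._last
        self._last = now
        # Refill in proportion to elapsed time, never beyond capacity.
        self._tokens = min(self.capacity, self._tokens + elapsed * self.rate)
        if self._tokens >= n:
            self._tokens -= n
            return True
        return False
```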

Resilience Patterns

- Bulkhead isolation: Separate worker pools per platform. An FCM slowdown shouldn’t block APNs sends.

- Dead-letter queues: Persistently failing messages (e.g., invalid tokens) are offloaded and logged for inspection without stalling delivery flows.

- Circuit breakers: Temporarily stop retries to downstream providers showing high failure rates (e.g., APNs 503s).

- Backoff strategies: Use exponential backoff with jitter for retry queues. Avoid stampedes during provider throttling.
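A per-provider circuit breaker can be sketched as a small state machine. It opens after a run of consecutive failures and rejects calls while open; after a cooldown it becomes half-open, where requests are allowed as trials: a success closes it, and a failure reopens it and restarts the cooldown. The thresholds are assumptions. One instance per provider (APNs, FCM, WebPush) keeps an outage on one from throttling the others, in line with the bulkhead isolation above.

```python
import time
from typing import Callable, Optional


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 20, cooldown_seconds: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.failure_threshold = failure_threshold
        self.cooldown = cooldown_seconds
        self._clock = clock
        self._failures = 0                      # consecutive failures
        self._opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self._opened_at is None:
            return "closed"
        if self._clock() - self._opened_at >= self.cooldown:
            return "half_open"
        return "open"

    def allow_request(self) -> bool:
        return self.state != "open"

    def record_success(self) -> None:
        self._failures = 0
        self._opened_at = None                  # close the breaker

    def record_failure(self) -> None:
        self._failures += 1
        if self.state == "half_open" or self._failures >= self.failure_threshold:
            self._opened_at = self._clock()     # (re)open and restart the cooldown
```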

Throughput Benchmarks (Baseline Targets)

- API ingestion: 2,000–10,000 RPS per node depending on payload size.

- Routing and fan-out: 5,000–20,000 device token lookups/sec with Redis + partitioning.

- Delivery worker throughput:
  - APNs: ~5,000–10,000 pushes/min per connection (HTTP/2 multiplexed)
  - FCM: ~12,000/min with batch requests
  - WebPush: ~2,000/min (single endpoint sends)
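These baselines translate into a rough sizing calculation: divide the target send rate by the per-connection (or per-worker) throughput and round up. The sketch uses the upper ends of the ranges above as its defaults, and the 2M messages/minute target in the example (a 10M-user campaign in 5 minutes) is only an illustrative input; the optional `headroom` factor is where real planning would add spare capacity.

```python
import math

# Per-connection/worker baselines from the list above (messages per minute, upper ends).
BASELINE_PER_MIN = {"apns": 10_000, "fcm": 12_000, "webpush": 2_000}


def connections_needed(target_per_min: float, platform: str, headroom: float = 1.0) -> int:
    """Round up target / baseline; headroom > 1.0 adds spare capacity (e.g. 1.5 for 50%)."""
    per_conn = BASELINE_PER_MIN[platform]
    return math.ceil(target_per_min * headroom / per_conn)
```

For example, 2M messages/minute routed entirely through APNs comes to 200 connections, while the same volume over WebPush at the 2,000/min baseline would need 1,000 senders, which is why the platform mix of a campaign matters when sizing.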


Security Architecture

Push notification systems interface with sensitive user data, cloud messaging platforms, and internal services. If security isn’t baked into every layer—from token storage to API interactions—you’re setting up for privacy leaks, abuse vectors, and compliance failures. This section covers the essential security design principles and practices to harden your system end-to-end.

1. Authentication & Authorization

- Internal access control: All internal APIs (ingestion, scheduling, routing) should be protected with short-lived JWTs or mutual TLS—especially if accessible across services or environments.

- API Gateway policies: Use gateway-level IAM or RBAC to restrict which services or users can submit different types of notifications (e.g., transactional vs. marketing).

- Tenant separation: If supporting multi-tenant systems, enforce strict tenant-based isolation in every query, route, and message dispatch flow.

2. Platform Credential Management

Third-party integrations with APNs and FCM require secrets that must be handled with care.

- Store keys in a secret manager: Use tools like AWS Secrets Manager, HashiCorp Vault, or GCP Secret Manager to store APNs keys and FCM credentials.

- Rotate credentials periodically: Build automation to rotate service credentials at least quarterly.

- Limit key scopes: Use service accounts with the least privileges required—no more, no less.
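For APNs token-based authentication, the worker signs a short JWT (ES256, with `kid` set to the key ID and `iss`/`iat` claims carrying the team ID and issue time) and reuses it across requests; Apple's guidance is to refresh it no more often than once every 20 minutes and at least once every 60 minutes. This sketch covers only the caching schedule. The `sign` callable, which would perform the ES256 signature with the `.p8` key fetched from the secret manager, is a hypothetical placeholder, and the 45-minute refresh point is an assumption inside Apple's window.

```python
import time
from typing import Callable, Optional

# Apple: refresh no more than once per 20 minutes and at least once per 60 minutes.
REFRESH_AFTER_SECONDS = 45 * 60  # assumption: a value inside that window


class ApnsProviderToken:
    def __init__(self, team_id: str, key_id: str,
                 sign: Callable[[dict, dict], str],
                 clock: Callable[[], float] = time.time):
        self._team_id = team_id
        self._key_id = key_id
        self._sign = sign          # hypothetical: (header, claims) -> compact JWT string
        self._clock = clock
        self._token: Optional[str] = None
        self._issued_at = 0.0

    def get(self) -> str:
        """Return the cached token, re-signing only when it is old enough to refresh."""
        now = self._clock()
        if self._token is None or now - self._issued_at >= REFRESH_AFTER_SECONDS:
            header = {"alg": "ES256", "kid": self._key_id}
            claims = {"iss": self._team_id, "iat": int(now)}
            self._token = self._sign(header, claims)
            self._issued_at = now
        return self._token  # sent as "authorization: bearer <token>"
```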

3. Payload Security

- Don’t include PII in push payloads: Payloads are often visible at the OS level (especially Android). Never include names, email addresses, or sensitive tokens.

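- Encrypt at rest and in transit: Use TLS for every connection, including to the database and Redis, and encrypt stored data with AES-256 or the platform’s native at-rest encryption.

- Web Push payload encryption: Web Push payloads must be encrypted per RFC 8291 (ECDH over NIST P-256 plus AES-128-GCM). This is separate from VAPID, which authenticates the sender with a P-256-signed JWT; mistakes in either step can cause push services to reject messages or browsers to drop them silently.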

4. Abuse Protection & Rate Limiting

Left unchecked, a push system can be weaponized—either to spam users or to overload delivery infrastructure.

- Per-service rate limits: Use Redis counters or leaky-bucket algorithms to enforce per-API and per-tenant rate caps.

- Quota enforcement: Daily message limits per user or segment (especially for marketing) should be enforced at the routing layer.

- Auth-level audit trails: All requests to send messages should be auditable with service name, user identity, IP, and message metadata.

5. Secrets & Credential Hygiene

- Use environment-level isolation: Never share dev, staging, and prod credentials for push services.

- Disable plaintext logs: Avoid logging tokens, credentials, or push payloads with sensitive metadata in logs. Mask anything that’s not needed for debugging.

- Monitoring for misuse: Alert if usage spikes outside expected patterns—especially high-volume sends from non-prod accounts or sudden FCM errors.

6. Client-Side Protections (Minimal but Worth Mentioning)

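- App-level token rotation: Ensure your mobile SDK handles token refreshes and unregisters tokens on logout. Apps cannot run code on uninstall, so detect uninstalls server-side from provider errors (e.g., APNs 410, FCM `UNREGISTERED`).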

- SSL pinning (optional): If the app connects to your backend directly to register for push, consider pinning to prevent MITM attacks.

Extensibility & Maintainability

A scalable push system is not a one-off build — it must evolve with changing business needs, platform updates, and usage patterns. The architecture must be modular, easy to extend, and safe to maintain without introducing regressions. This section outlines how to structure your push infrastructure for long-term evolution and developer sanity.

1. Plugin-Friendly Architecture

A clean separation between core logic and delivery-specific logic enables faster iteration without destabilizing production flows.

- Delivery workers as plugins: Encapsulate APNs, FCM, and WebPush logic in standalone modules with a shared delivery interface (e.g., `send(token, payload, config)`).

- Message transformers: Implement transformation hooks per platform (e.g., APNs nests display fields under `aps.alert`, while FCM uses `notification` + `data`).

- Hooks for custom logic: Allow pre-send and post-send hooks in the routing layer for tenant-specific overrides, e.g., custom throttling or A/B logic.
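Per-platform transformers behind one shared signature might look like the sketch below, assuming a neutral, hypothetical `MessagePayload` with a title, a body, and a flat data map. The APNs shape (display fields under `aps.alert`, custom keys beside `aps`) and the FCM HTTP v1 shape (`message.notification` plus a `data` map whose values must be strings) follow those providers' documented formats; the Web Push body is application-defined JSON parsed by the site's service worker, so its shape here is an assumption.

```python
import json
from dataclasses import dataclass, field
from typing import Callable


@dataclass(frozen=True)
class MessagePayload:  # hypothetical neutral message type
    title: str
    body: str
    data: dict = field(default_factory=dict)


def to_apns(msg: MessagePayload, token: str) -> dict:
    # APNs: display fields under aps.alert, custom keys beside "aps".
    # The device token goes in the request path (/3/device/<token>), not the body.
    return {"aps": {"alert": {"title": msg.title, "body": msg.body}}, **msg.data}


def to_fcm(msg: MessagePayload, token: str) -> dict:
    # FCM HTTP v1: notification block plus a data map whose values must be strings.
    return {"message": {
        "token": token,
        "notification": {"title": msg.title, "body": msg.body},
        "data": {k: str(v) for k, v in msg.data.items()},
    }}


def to_webpush(msg: MessagePayload, token: str) -> dict:
    # Web Push: app-defined JSON, encrypted and POSTed to the subscription endpoint,
    # which (not the body) identifies the browser.
    return {"title": msg.title, "body": msg.body, "data": msg.data}


TRANSFORMERS: dict[str, Callable[[MessagePayload, str], dict]] = {
    "ios": to_apns, "android": to_fcm, "web": to_webpush,
}


def render(platform: str, msg: MessagePayload, token: str) -> bytes:
    """Serialize the platform-specific body compactly for sending."""
    return json.dumps(TRANSFORMERS[platform](msg, token), separators=(",", ":")).encode()
```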

2. Clean Code Practices

Push codebases tend to become dumping grounds of platform-specific hacks unless rigorously maintained.

- Strict interface contracts: Use clear interfaces for delivery modules and message definitions (e.g., `MessagePayload`, `DeliveryContext`).

- Central config management: All rate limits, retry policies, and platform credentials should be externally defined in config files or secrets managers.

- Service boundaries: Avoid coupling routing logic with delivery logic. Treat each as an independently deployable microservice.

3. Feature Flags & Versioning

Support iterative feature development and backward compatibility through strict versioning.

- Versioned message formats: Define message schemas with versioning (`v1`, `v2`, etc.) and support upgrades in parallel without breaking older formats.

- Feature flags for new flows: Roll out new delivery logic behind flags per tenant or environment to minimize blast radius.

- Soft-deprecation model: Mark legacy APIs and message structures for scheduled removal; add logging when used.
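Supporting old and new message formats side by side can be as simple as a chain of upgrade functions that lift any incoming message to the current version before routing, logging each time a deprecated version shows up. The `v1`/`v2` shapes here (v2 splitting a single `text` field into `title` and `body`) are invented for illustration.

```python
import logging
from typing import Callable

log = logging.getLogger(__name__)
CURRENT_VERSION = 2


def _v1_to_v2(msg: dict) -> dict:
    # Hypothetical change: v1 carried one "text" field; v2 splits it into title/body.
    upgraded = {k: v for k, v in msg.items() if k != "text"}
    upgraded.update({"version": 2, "title": "", "body": msg.get("text", "")})
    return upgraded


# One step per version: UPGRADES[n] turns a vn message into a v(n+1) message.
UPGRADES: dict[int, Callable[[dict], dict]] = {1: _v1_to_v2}


def upgrade(msg: dict) -> dict:
    """Lift a message of any supported version to CURRENT_VERSION, one step at a time."""
    version = msg.get("version", 1)
    if version > CURRENT_VERSION or (version < CURRENT_VERSION and version not in UPGRADES):
        raise ValueError(f"unsupported message version {version}")
    if version < CURRENT_VERSION:
        log.warning("deprecated message version %s received", version)  # soft-deprecation signal
    while version < CURRENT_VERSION:
        msg = UPGRADES[version](msg)
        version = msg["version"]
    return msg
```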

4. Testing & Validation Layers

One broken payload can cascade into delivery failures. Safeguard every new release with strong validation.

- Schema validation: Use JSON Schema or Protobuf validation before enqueueing messages for dispatch.

- Contract testing: Define expectations for platform modules using Pact or similar tools to prevent integration drift.

- Sandbox mode: Provide a dry-run or test mode for internal services to simulate push without hitting live endpoints.
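A pre-enqueue validation step might check required fields and the serialized size before a message ever reaches a worker. The 4,096-byte ceiling reflects the standard limit for APNs and FCM notification payloads; the required-field list and the error format are assumptions, and a production service would more likely express these rules as the JSON Schema or Protobuf definitions described above.

```python
import json

MAX_PAYLOAD_BYTES = 4096             # APNs and FCM notification payload ceiling
REQUIRED_FIELDS = ("title", "body")  # assumption for this sketch


def validate(message: dict) -> list[str]:
    """Return a list of problems; an empty list means the message may be enqueued."""
    errors = [f"missing field: {name}" for name in REQUIRED_FIELDS if not message.get(name)]
    # Measure compact UTF-8 JSON, roughly what a provider would receive.
    size = len(json.dumps(message, separators=(",", ":"), ensure_ascii=False).encode("utf-8"))
    if size > MAX_PAYLOAD_BYTES:
        errors.append(f"payload is {size} bytes; limit is {MAX_PAYLOAD_BYTES}")
    return errors
```

Measuring the neutral message is only an approximation; checking the rendered platform-specific payload as well catches the extra bytes each provider's envelope adds.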

5. Maintainability Anti-Patterns to Avoid

- Hardcoding platform-specific behavior: Always abstract platform logic into modules or plugins.

- Mixing tenant logic with global logic: Use scoped services or route-level conditions — don’t inline tenant overrides.

- Lack of observability in new flows: New routes or modules must include structured logs, metrics, and error handling from day one.

Performance Optimization

Pushing millions of notifications is not just about system design — it’s also about how efficiently each component executes under pressure. Performance tuning can reduce latency, improve throughput, and prevent downstream failures. This section focuses on how to optimize your push pipeline at the data, processing, and delivery layers.

1. Database Query Tuning

- Targeted indexes: Ensure indexes on `token`, `user_id`, and `notification_id` in their respective tables. Composite indexes help with fan-out reads (e.g., `device_id, status`).

- Query by segment ID, not raw user filters: Avoid complex join queries during send-time. Precompute segments and cache user IDs or tokens in Redis.

- Connection pool management: Use connection pooling (e.g., PgBouncer) to avoid spiky request bottlenecks during high-volume runs.

2. Token Fan-Out Efficiency

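- Batch reads from Redis: When fetching tokens per user or per segment, use `SMEMBERS` for the token set, then pipeline the per-token `HGETALL` calls (`MGET` only works on string keys, not on the token hashes described earlier).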

- Bulk delivery loops: Process in batches (e.g., 500–1,000 tokens per iteration) to avoid per-device network overhead.

- Payload template caching: Cache rendered payloads (title/body/metadata) when broadcasting to similar device groups.
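The two ideas above can be sketched together. A generator chunks a token stream into fixed-size batches. An `lru_cache`-backed renderer ensures each (template, locale) pair is rendered once per broadcast rather than once per device. `render_payload` and its arguments are hypothetical placeholders for a real template store.

```python
from functools import lru_cache
from itertools import islice
from typing import Iterable, Iterator


def batched(tokens: Iterable[str], size: int) -> Iterator[list[str]]:
    """Yield lists of at most `size` tokens from any iterable, including a lazy scan."""
    if size < 1:
        raise ValueError("size must be >= 1")
    it = iter(tokens)
    while batch := list(islice(it, size)):
        yield batch


@lru_cache(maxsize=1024)
def render_payload(template_id: str, locale: str) -> str:
    """Hypothetical renderer; a real one would load and localize a stored template.
    Caching on (template_id, locale) renders once per device group, not per device."""
    return f"[{locale}] {template_id}"
```

A broadcast loop would then look like `for batch in batched(tokens, 500): send(batch, render_payload("promo", "en"))`, where `send` stands in for a platform adapter call.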

3. Async Processing & Worker Tuning

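- Message prefetch: Tune consumer prefetch and fetch settings (e.g., RabbitMQ `prefetch_count`, Kafka consumer `max.poll.records` and `fetch.min.bytes`) to reduce idle time in delivery workers.

- Delivery batching: For non-personalized campaigns, batch where the platform allows it. FCM supports topic messaging, and the Firebase Admin SDKs accept multicast sends of up to 500 tokens per call. Web Push has no batch endpoint because each payload is encrypted for its own subscription, so batching there is limited to grouping requests on the sender side.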

- Worker pooling per region: Run isolated worker pools (or pods) per geographic region to minimize cross-region latency and reduce cloud egress cost.

4. Rate Limiting and Flow Control

- Sliding window counters: Use Redis Lua scripts to implement platform-level rate caps (e.g., max 100K/sec per platform or tenant).

- Queue throttling: Dynamically slow down message read rates from the broker when error rates spike (e.g., APNs 429s or FCM quota errors).

- Backoff control: On retries, use jittered exponential backoff to prevent thundering herds from failed deliveries.
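Below is an in-process sketch of both techniques. `SlidingWindowLimiter` uses the weighted two-window approximation of a sliding window. In production, the same arithmetic would live in a Redis Lua script so that all workers share one atomic counter. `backoff_delay` implements "full jitter" exponential backoff, drawing each delay uniformly between zero and a capped exponential ceiling. The limits, window size, and base/cap values are illustrative assumptions.

```python
import random
import time
from typing import Optional


class SlidingWindowLimiter:
    """Approximate sliding-window counter, kept in process for illustration.
    A Redis Lua script would run the same arithmetic atomically for all workers."""

    def __init__(self, limit: int, window_s: float = 1.0) -> None:
        self.limit = limit
        self.window_s = window_s
        # (key, window index) -> requests counted in that fixed window.
        # Old windows are never evicted here; Redis keys would carry a TTL instead.
        self.counts: dict[tuple[str, int], int] = {}

    def allow(self, key: str, now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        window = int(now // self.window_s)
        elapsed = (now % self.window_s) / self.window_s  # fraction of current window used
        current = self.counts.get((key, window), 0)
        previous = self.counts.get((key, window - 1), 0)
        # Count the part of the previous window that still overlaps the sliding window.
        estimated = previous * (1 - elapsed) + current
        if estimated >= self.limit:
            return False
        self.counts[(key, window)] = current + 1
        return True


def backoff_delay(attempt: int, base_s: float = 0.5, cap_s: float = 30.0,
                  rng: Optional[random.Random] = None) -> float:
    """Full-jitter backoff: a uniform delay in [0, min(cap_s, base_s * 2**attempt)]."""
    rng = rng or random.Random()
    return rng.uniform(0, min(cap_s, base_s * 2 ** attempt))
```

Randomizing the whole delay, not just adding a small offset, spreads retries from a mass failure across the full backoff interval.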

5. Frontend Rendering Performance (Web Push Only)

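- Payload minimization: Keep WebPush messages compact and strip extra metadata. Push services are only guaranteed to accept payloads up to 4096 bytes (RFC 8030), and encryption adds overhead, so aiming for ~2KB or less leaves comfortable headroom.

- On-click routing: Attach a deep link or a URL with query parameters to each notification (for example, FCM's `webpush.fcm_options.link`, or a URL in the notification `data` that your service worker opens from its `notificationclick` handler) to drive faster in-app or browser experiences.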

- Lazy render enrichment: Where possible, show minimal notification content and load full details after the user clicks.

6. Monitoring & Feedback Loop Optimization

- Track latency percentiles: Don’t just monitor average delivery time. Track P90 and P99 to catch long-tail delivery slowness.

- Feedback filtering: Ingest only critical delivery feedback (token expired, device unreachable) instead of everything, to reduce analytics load.

- Metrics aggregation: Use tools like Prometheus with cardinality-aware labels to avoid metric explosion under high volume.
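A nearest-rank percentile helper shows why averages hide tail latency. In the hypothetical sample below, 98 of 100 deliveries take 0.2 s and 2 take 12 s. The mean stays under half a second, while P99 exposes the 12-second stragglers.

```python
import math
from statistics import mean


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest sample with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# Hypothetical delivery latencies in seconds: 98 fast, 2 slow outliers.
latencies = [0.2] * 98 + [12.0] * 2
print(f"mean={mean(latencies):.3f}s p90={percentile(latencies, 90)}s p99={percentile(latencies, 99)}s")
# prints: mean=0.436s p90=0.2s p99=12.0s
```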

Testing Strategy

A system that pushes to millions of devices can’t rely on manual QA or isolated tests. Delivery is asynchronous, third-party dependent, and real-time — which means testing must be layered, automated, and failure-resilient. This section covers how to approach testing across all phases: local development, CI pipelines, and production hardening.

1. Unit Testing

Unit tests ensure internal logic behaves correctly across routing, token resolution, and payload generation.

- Test routing logic: Validate user targeting filters, preference enforcement, and platform splitting.

- Mock token sets: Simulate Redis/DB responses with fake token mappings to test fan-out logic.

- Schema validation: Ensure payloads conform to platform schemas — alert/message structure, platform-specific fields, etc.

2. Integration Testing

- Local message brokers: Run Kafka/RabbitMQ locally (or in Testcontainers) to simulate the full producer-to-consumer flow.

- Fake delivery adapters: Mock APNs, FCM, and WebPush with local endpoints that simulate success/failure cases, including throttling or unregistered devices.

- End-to-end delivery flow: Assert that a message submitted to the ingestion API successfully reaches the mocked delivery adapter via queue, rules engine, and worker services.
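A minimal fake adapter for integration tests might look like this. It scripts outcomes from a token naming convention: tokens prefixed `dead-` come back unregistered. It can also start returning throttling responses after a set number of sends. The status codes and reason strings are loosely modeled on APNs responses. The prefix convention and class names are hypothetical test fixtures.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class FakeResult:
    status: int
    reason: str = ""


@dataclass
class FakePlatformAdapter:
    """Test double for a push platform. Outcomes are scripted by convention:
    tokens starting with "dead-" are unregistered, and after `throttle_after`
    successful sends every request is throttled."""

    throttle_after: Optional[int] = None
    sent: list[str] = field(default_factory=list)

    def send(self, token: str, payload: dict) -> FakeResult:
        if self.throttle_after is not None and len(self.sent) >= self.throttle_after:
            return FakeResult(429, "TooManyRequests")
        if token.startswith("dead-"):
            return FakeResult(410, "Unregistered")
        self.sent.append(token)  # record successful deliveries for assertions
        return FakeResult(200)
```

A test can then submit a message through the ingestion API with a `dead-` token and assert that the token ends up deactivated, with no network access involved.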

3. Contract Testing

Contract tests validate that platform delivery modules don’t break due to external API or message format changes.

- Use Pact or similar tooling: Define contract expectations for each delivery module (APNs, FCM, WebPush).

- Versioned schemas: Keep message formats versioned, and verify backward compatibility on changes.

4. Load & Stress Testing

Push systems must hold up under real-world volumes. Load testing helps validate queue performance, Redis hits, and DB contention.

- Simulate campaigns: Inject 10M+ mock messages into the queue to observe worker scaling, error rates, and message latency.

- Spike and soak tests: Validate how the system handles short bursts (e.g., 100K/sec) vs. long-term steady volume (e.g., 10K/sec for hours).

- Isolate slow services: Monitor which components (e.g., routing, DB writes, token cache) slow down under volume.

5. CI Pipeline Coverage

- Test every push path: Include delivery logic, fallback retries, and failure handling as part of automated CI runs.

- Use synthetic tokens: Generate dummy APNs/FCM tokens for test payloads (never use real ones in CI).

- Lint schemas: Auto-validate all payloads against platform spec before merge using JSON Schema or Protobuf checks.

6. Chaos & Fault Injection (Advanced)

For teams operating at massive scale, introduce controlled chaos to catch edge cases before users do.

- Drop delivery acknowledgments: Simulate network delays or loss between worker and push provider.

- Inject bad payloads: Corrupt fields or violate schema contracts to confirm system catches and isolates the issue.

- Throttle feedback ingestion: Delay delivery receipts to ensure system doesn’t block or crash due to missing status updates.

DevOps & CI/CD

Push notification systems span multiple services, queues, databases, and third-party integrations. That complexity demands an automated, reliable DevOps pipeline that can build, test, deploy, and roll back with confidence. This section covers the tooling and practices needed to keep your delivery pipeline fast, safe, and observable.

1. CI/CD Pipeline Design

- CI stages: Lint → Unit Tests → Contract Tests → Integration Tests → Schema Validation → Docker Build

- CD stages: Canary Deployment → Health Checks → Metrics Gate → Full Rollout

- Pre-deploy schema diff: Auto-check database migrations for compatibility and potential downtime issues (e.g., adding non-null columns without defaults).

2. Deployment Strategies

- Blue-Green Deployment: For schedulers and ingestion APIs, use blue-green to switch traffic in a single cutover without downtime.

- Canary Deployment: For delivery workers and routers, deploy to a small pod subset (e.g., 5%) and observe for delivery failures or platform API errors.

- Progressive rollouts: Roll out features using config flags (not code branches) so changes can be toggled at runtime.

3. Infrastructure as Code (IaC)

- Use Terraform or Pulumi: Manage infra across AWS/GCP for queues, DBs, Redis clusters, and VPCs.

- Dynamic environments: Spin up isolated test environments on feature branches to test push flows end-to-end without polluting staging.

- Secrets automation: Store push service credentials in secret managers and inject into pods via sealed secrets or sidecars.

4. GitOps & Configuration Management

- Versioned configs: Keep rate limits, retry policies, and tenant overrides in Git — not hardcoded into services.

- ConfigMap-driven behavior: Inject platform-specific settings into workers (e.g., APNs pool size, WebPush TTL) via Kubernetes ConfigMaps.

- Rollback via git revert: If a config or flag breaks production, GitOps enables fast rollback with auditability.

5. Release Validation & Post-Deploy Checks

- Post-deploy hooks: Trigger health checks or smoke tests automatically after rollout.

- Watch delivery metrics: Monitor delivery rates, error codes, and queue size in the 15–30 minutes post-deploy before scaling fully.

- Fail-fast behavior: If delivery errors spike (e.g., invalid tokens, 403s from FCM), auto-halt deployment and notify on-call.
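The metrics gate and fail-fast check can be sketched as a small verdict function. It compares the canary's error rate to the baseline's and waits until the canary has seen enough traffic to judge. It also applies an absolute floor so that a near-zero baseline does not trigger false halts. The thresholds (`max_ratio`, `min_sent`, `abs_floor`) are illustrative assumptions, not recommended values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeliveryStats:
    sent: int
    failed: int

    @property
    def error_rate(self) -> float:
        return self.failed / self.sent if self.sent else 0.0


def canary_verdict(baseline: DeliveryStats, canary: DeliveryStats,
                   max_ratio: float = 1.5, min_sent: int = 1000,
                   abs_floor: float = 0.01) -> str:
    """Return "wait", "halt", or "promote" for a canary release."""
    if canary.sent < min_sent:
        return "wait"  # too little traffic to judge yet
    # Allow up to max_ratio x the baseline rate, but never halt below abs_floor.
    allowed = max(baseline.error_rate * max_ratio, abs_floor)
    return "halt" if canary.error_rate > allowed else "promote"
```

A deploy pipeline could evaluate this repeatedly during the post-deploy watch window, stop the rollout on "halt", and notify on-call.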

Need Help Shipping Push Notifications with Confidence?


Building a robust push system is one thing—operating it in production without fear is another. If you need help setting up a CI/CD pipeline, secure deployments, and rollback-safe releases for your push stack, we’ve done it before.

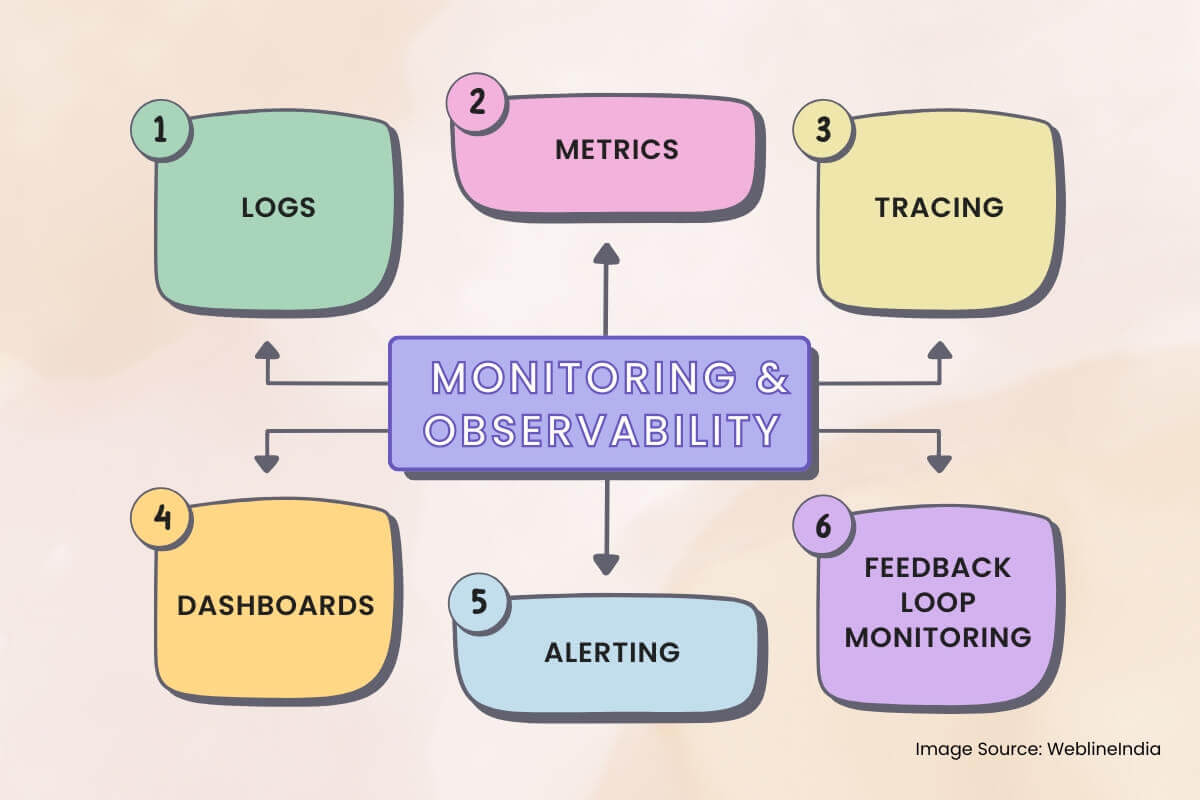

Monitoring & Observability

Push notification systems are asynchronous, distributed, and rely on black-box third-party services like APNs and FCM. Without proper observability, failures go unnoticed, silent delivery drops occur, and debugging becomes guesswork. This section outlines what to monitor, how to trace message flow, and how to build meaningful alerts.

1. Logs

- Structured logging: Use JSON logs with fields like `message_id`, `device_id`, `platform`, `status`, and `error_code` for every stage of delivery.

- Correlation IDs: Tag logs from ingestion → routing → delivery workers with a shared request ID for end-to-end traceability.

- Redact sensitive fields: Mask tokens, user IDs, or payload content in logs—especially on shared or third-party platforms.
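The sketch below shows structured, redacted logging with Python's standard `logging` module. A custom formatter emits one JSON object per line and masks fields assumed to be sensitive (`token`, `user_id`). A correlation ID is passed through every stage. The `log_stage` helper and the choice of sensitive fields are assumptions for illustration.

```python
import json
import logging

# Assumption: which structured fields count as sensitive in your environment.
SENSITIVE_FIELDS = {"token", "user_id"}


def redact(value: str) -> str:
    """Mask a value, keeping the last 4 characters of longer values for matching."""
    return "***" + value[-4:] if len(value) > 8 else "***"


class JsonFormatter(logging.Formatter):
    """Render each record as a single JSON object, masking sensitive fields."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {"level": record.levelname, "msg": record.getMessage()}
        for key, value in getattr(record, "fields", {}).items():
            entry[key] = redact(str(value)) if key in SENSITIVE_FIELDS else value
        return json.dumps(entry)


def log_stage(logger: logging.Logger, stage: str, correlation_id: str, **fields) -> None:
    """Log one pipeline stage; the same correlation_id is passed from ingestion onward."""
    logger.info(stage, extra={"fields": {"correlation_id": correlation_id, **fields}})


logger = logging.getLogger("push")
handler = logging.StreamHandler()  # writes to stderr
handler.setFormatter(JsonFormatter())
logger.addHandler(handler)
logger.setLevel(logging.INFO)
log_stage(logger, "delivered", "req-7f3a", platform="fcm", token="fcm-token-abcdef123456")
# stderr: {"level": "INFO", "msg": "delivered", "correlation_id": "req-7f3a", "platform": "fcm", "token": "***3456"}
```

The correlation ID would typically be generated once at ingestion (for example with `uuid.uuid4()`) and carried in message headers to routing and delivery workers.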

2. Metrics

Metrics tell you what’s happening at a macro level. Aggregate them across services to detect platform-wide issues early.

- Delivery throughput: Messages sent per platform, per minute. Break down by `tenant`, `priority`, and `message_type` (transactional/marketing).

- Delivery success/error rate: Track `delivered`, `dropped`, `failed`, and `retrying` buckets — with tags for root cause (`unregistered`, `rate_limited`, `invalid_token`).

- Queue latency: Time from enqueue to worker pickup and worker to platform acknowledgment.

3. Tracing

Traces are critical when messages silently vanish or are delayed. Integrate distributed tracing into every internal service.

- Trace spans: Ingestion → Redis/token lookup → Preference check → Queue enqueue → Worker dispatch → Platform delivery.

- Link logs to traces: Include trace IDs in logs and dashboard links (e.g., from Kibana to Jaeger/Tempo).

- Sampling rate control: Always trace OTPs and failed messages; use probabilistic sampling for campaigns to reduce overhead.
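Here is one way to express that sampling policy, assuming OTPs are tagged with a hypothetical `message_type` of `"otp"`. The trace ID is hashed so that every service makes the same keep/drop decision for a given trace, and OTP and failed traces are always kept. A failure is only known once the trace completes, so the `failed` branch assumes a tail-sampling setup (for example, a collector that buffers spans until the trace ends).

```python
import hashlib

# Assumption: message types that must always be traced.
ALWAYS_TRACE_TYPES = {"otp"}


def should_sample(trace_id: str, message_type: str, failed: bool = False,
                  campaign_rate: float = 0.01) -> bool:
    """Keep every OTP and failed trace; keep roughly `campaign_rate` of the rest."""
    if failed or message_type in ALWAYS_TRACE_TYPES:
        return True
    # Hashing the trace ID makes the decision identical in every service for one trace.
    digest = hashlib.sha256(trace_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") < campaign_rate * 2 ** 64
```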

4. Dashboards

Real-time dashboards help operators and on-call engineers understand system health at a glance.

- Platform delivery status: Real-time counts of sent, failed, retried messages per platform (APNs/FCM/WebPush).

- Queue depth: Live visibility into routing and delivery queues — rising queue depth indicates saturation or downstream slowdown.

- Feedback signal analysis: Visualize token invalidations, rate limits hit, and feedback receipts from push platforms.

5. Alerting

- Failure spike alerts: Alert if failed messages exceed a threshold (absolute count or error rate %) within a sliding window.

- Delivery latency alerts: Alert if P95 latency exceeds the SLA (e.g., OTP > 3s, campaign > 30s).

- Dead-letter queue growth: Alert if DLQs start accumulating messages — signals a persistent failure path.

6. Feedback Loop Monitoring

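Push platforms return feedback in two forms: per-request errors in the send response (e.g., APNs status `410` with reason `Unregistered`, FCM `UNREGISTERED`) and delivery data that arrives later (e.g., FCM's BigQuery delivery data export). Both need their own ingestion pipeline, separate from the delivery workers.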

- Feedback ingestion workers: Consume and store unregistered token events, delivery errors, and rate-limit advisories.

- Metrics extraction: Aggregate feedback signals into dashboards and error-class distributions.

- Token cleanup automation: Trigger background jobs to deactivate or remove stale tokens based on platform feedback.
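The sketch below shows how feedback could drive token cleanup: a function maps a platform response to a follow-up action. The reason strings are ones returned by APNs (`Unregistered`, `BadDeviceToken`) and by the FCM HTTP v1 API (`UNREGISTERED`). For Web Push, a `404` or `410` from the push service means the subscription is gone. The `Action` enum and the exact mapping are illustrative and deliberately not exhaustive.

```python
from enum import Enum


class Action(Enum):
    OK = "ok"
    DEACTIVATE_TOKEN = "deactivate_token"
    RETRY = "retry"
    DEAD_LETTER = "dead_letter"


def classify(platform: str, status: int, reason: str = "") -> Action:
    """Map a platform send response to a follow-up action (a sketch, not exhaustive)."""
    if 200 <= status < 300:
        return Action.OK
    if platform == "apns" and reason in {"Unregistered", "BadDeviceToken"}:
        # BadDeviceToken can also signal a sandbox/production mismatch; check config
        # before deactivating tokens in bulk.
        return Action.DEACTIVATE_TOKEN
    if platform == "fcm" and reason == "UNREGISTERED":
        return Action.DEACTIVATE_TOKEN
    if platform == "webpush" and status in {404, 410}:
        return Action.DEACTIVATE_TOKEN  # subscription expired or was removed
    if status == 429 or status >= 500:
        return Action.RETRY
    return Action.DEAD_LETTER  # other 4xx: bad payload or auth, retrying won't help
```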

Trade-offs & Design Decisions

Every architectural choice comes with trade-offs. Designing a scalable push notification system means constantly balancing latency vs. throughput, simplicity vs. flexibility, and reliability vs. cost. This section outlines some of the key decisions made throughout the system and the alternatives that were considered.

1. Platform-Specific Workers vs. Unified Delivery Interface

- Decision: Separate APNs, FCM, and WebPush into isolated delivery services.

- Trade-off: More code and deployment complexity, but much better failure isolation, scalability tuning, and debuggability per platform.

- Alternative: A generic unified interface could reduce code duplication but would require extensive conditional logic and reduce observability.

2. Redis Token Cache vs. DB-Only Delivery

- Decision: Use Redis for active token lookup and fan-out during message routing.

- Trade-off: Requires background syncs and invalidation logic, but drastically improves delivery throughput and reduces DB load.

- Alternative: Direct DB reads during fan-out would simplify architecture but wouldn’t scale at campaign-level volumes.

3. Kafka for Message Queuing vs. Simpler Queues

- Decision: Use Kafka or equivalent distributed log for routing and delivery queues.

- Trade-off: Steeper learning curve and infra management burden, but enables high durability, retries, and consumer scalability.

- Alternative: RabbitMQ/SQS are easier to operate but offer less flexibility for large-scale fan-out or delivery guarantees.

4. Dead Letter Queues vs. Inline Retry Loops

- Decision: Use dedicated DLQs for permanent failures and limit in-place retries to 2–3 attempts.

- Trade-off: Slightly higher operational overhead but prevents queue blocking and allows async recovery processing.

- Alternative: Inline retry with backoff is easier to implement but risks overloading downstream systems or delaying messages.
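The retry-then-DLQ decision can be sketched as a wrapper that retries only transient failures and caps attempts (3 here). Everything else goes to a dead-letter callback. `TransientError`, the `dead_letter` callback, and the backoff values are hypothetical. In a real worker, the callback would publish to the DLQ topic.

```python
import random
import time
from typing import Callable


class TransientError(Exception):
    """Raised by a send function for failures worth retrying (e.g., 429 or 5xx)."""


def deliver_with_retries(
    send: Callable[[], None],
    dead_letter: Callable[[str], None],
    max_attempts: int = 3,
    base_s: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Return True on success; otherwise route the failure to the DLQ and return False."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be >= 1")
    for attempt in range(max_attempts):
        try:
            send()
            return True
        except TransientError as exc:
            if attempt == max_attempts - 1:
                dead_letter(f"retries exhausted: {exc}")
                return False
            # Jittered exponential backoff between in-place attempts.
            sleep(random.uniform(0, base_s * 2 ** attempt))
        except Exception as exc:  # anything else is treated as a permanent failure
            dead_letter(f"permanent failure: {exc}")
            return False
    return False  # not reached: the final iteration always returns
```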

5. Time-Based DB Partitioning vs. Flat Table Growth

- Decision: Partition delivery logs by month to control index size and retention policies.

- Trade-off: More complicated query routing and potential data access fragmentation.

- Alternative: A monolithic table would be easier to manage initially, but would degrade performance and complicate retention cleanup.
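Assuming delivery logs live in PostgreSQL, monthly partitions can be created ahead of time by a scheduled job. This hypothetical helper builds the DDL for one range partition. It assumes the parent table was declared with `PARTITION BY RANGE` on a timestamp column.

```python
from datetime import date


def month_partition_ddl(parent: str, month: date) -> str:
    """Return DDL for the monthly range partition of `parent` containing `month`.
    `parent` must be a trusted identifier; it is interpolated, not parameterized."""
    start = month.replace(day=1)
    if start.month == 12:
        end = date(start.year + 1, 1, 1)
    else:
        end = date(start.year, start.month + 1, 1)
    name = f"{parent}_{start:%Y_%m}"
    # The upper bound is exclusive in PostgreSQL range partitions.
    return (
        f"CREATE TABLE IF NOT EXISTS {name} PARTITION OF {parent} "
        f"FOR VALUES FROM ('{start.isoformat()}') TO ('{end.isoformat()}');"
    )
```

Retention then becomes `ALTER TABLE delivery_logs DETACH PARTITION delivery_logs_2023_01;` followed by `DROP TABLE delivery_logs_2023_01;`, instead of a large `DELETE`.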

6. Isolated Transactional and Marketing Flows vs. a Unified Flow

- Decision: Separate transactional from marketing flows at every layer — ingestion, queues, delivery services.

- Trade-off: More routing complexity and additional infrastructure, but prevents non-critical traffic from degrading critical paths (e.g., OTPs).

- Alternative: A unified flow simplifies routing but risks customer impact during heavy campaign loads or platform throttling.

7. Known Technical Debt Areas

- Stale token cleanup: Needs optimization or automation via feedback loop ingestion.

- Segment materialization: Currently runs just-in-time during campaign send; it should move to pre-scheduled background jobs.

- Rate limiter scaling: Shared Redis instances for rate control could become a bottleneck under global-scale campaigns.

What This Architecture Gets Right

Designing a scalable push notification system isn’t just about sending messages — it’s about delivering the right messages, to the right devices, reliably, and at scale. This architecture is purpose-built to meet that challenge head-on, balancing throughput, control, and maintainability across a highly distributed system.

Key Takeaways

- Decoupled architecture: Ingestion, routing, and delivery are cleanly separated for resilience and scalability.

- Platform isolation: Each push service (APNs, FCM, WebPush) is handled by a dedicated worker set tuned to its quirks.

- Token caching and fan-out: Redis powers fast token resolution, enabling high-throughput message delivery without hammering your primary DB.

- Data durability: Message queues ensure reliable delivery even when platform APIs fail, with DLQs capturing edge cases for later inspection.

- Observability everywhere: Tracing, metrics, and structured logs make debugging delivery pipelines and user-level flows practical at scale.

- Feature-safe extensibility: Modular components, hook-based logic, and schema versioning allow the system to evolve without breaking legacy paths.

Opportunities for Future Improvement

- Fine-grained throttling: Per-user or per-device rate caps could further reduce over-notification and improve UX.

- Segment materialization pipeline: Offloading segmentation to pre-scheduled background jobs would make campaign launches even faster.

- Regional delivery optimization: Geo-local delivery workers could reduce latency and cloud egress cost by keeping platform calls closer to user origin.

This system won’t just work—it’ll hold under pressure, scale with growth, and stay maintainable as use cases evolve. That’s what separates throwaway notification scripts from a real-time, production-grade delivery platform.

Need Help Getting Your Push Notification System to This Level?

Designing and scaling a multi-platform push notification architecture isn’t a weekend project. If you’re looking to build something reliable from day one or fix what’s already showing cracks, we can help engineer the system your users expect.
