Artificial Intelligence (AI) has transformed industries, reshaped business models, and altered how society interacts with technology. A WeblineIndia blog on AI stats and figures for 2024 and beyond documents how widely AI is used across industry verticals. Here are some of the stats it cites:

- The AI market is likely to grow at an annual rate of 37.7% between 2023 and 2030 – Grand View Research.

- The chatbot market is expected to reach nearly US $1.25 billion in 2025, a significant increase from US $190.8 million in 2016 – Authority Hacker.

- The computer vision market grew past US $20 billion in 2023 – Statista.

- The worldwide AI market is likely to be worth more than US $1.3 trillion in 2030 – MarketsandMarkets.

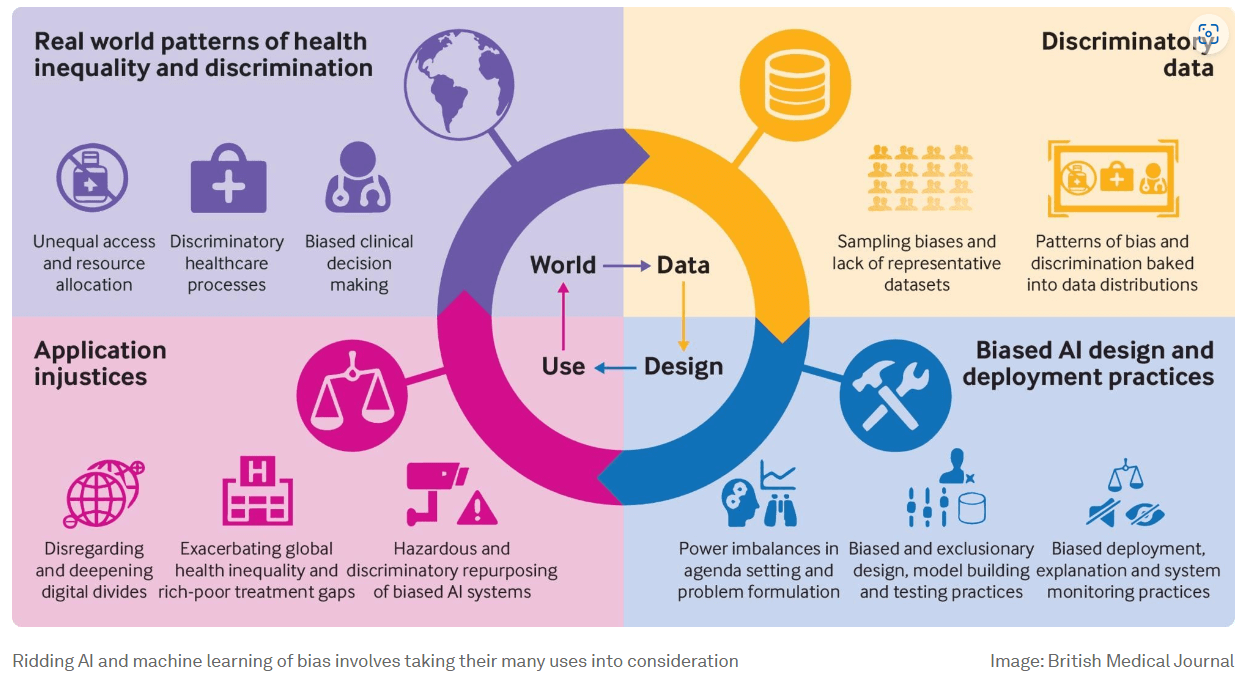

However, as AI continues to advance, one critical issue has emerged: bias. Bias can enter an AI system at any stage, from data collection through processing to decision-making, leading to unfair outcomes, perpetuating stereotypes, and deepening societal inequities. IBM's blog shares real-life examples of AI bias along with their causes and sources.

Back in 2014, a group of software developers at Amazon embarked on a project to automate the resume screening process for job seekers. However, by 2015, it became apparent that the algorithm was biased against female candidates, particularly for technical positions. As a result, Amazon’s hiring team chose not to rely on this tool due to concerns over bias and fairness.

In a related development, San Francisco lawmakers voted in 2019 to prohibit city agencies from using facial recognition technology, citing its tendency to misidentify women and individuals with darker skin tones. These instances highlight the ongoing challenges in ensuring technology operates without prejudice and upholds equality.

This blog delves into the importance of overcoming bias in AI, discusses how bias can infiltrate AI systems, and explores strategies to ensure fairness and equity in AI applications.

Understanding AI Bias

Businesses that outsource AI app development should choose a reputable offshore IT agency that is well aware of AI bias. AI systems exhibit bias when their algorithms yield consistently skewed results, rooted in flawed assumptions made during the development phase.

Such inaccuracies may originate from the data used, the architectural choices, or the software developers’ own biases. This can result in outcomes that perpetuate discrimination, exacerbating prevailing disparities in critical areas including employment, finance, law enforcement, and medical services.

Types of Bias in AI

- Data Bias: AI models learn from data, and if the training data is skewed or unrepresentative, the outcomes will reflect that bias.

- Algorithmic Bias: Algorithms themselves can harbor biases due to the way they are programmed, resulting in favoritism toward certain outcomes.

- User Bias: Users interacting with AI systems can influence the outputs, embedding their personal biases into the system.

- Cognitive Bias: Human cognitive biases, such as confirmation bias or availability bias, can affect how AI is designed, trained, and evaluated.

Addressing these biases is crucial for building AI systems that promote fairness and equity.

Why Is AI Bias a Problem?

AI bias is not just a technical issue; it’s a societal one. AI is increasingly used in decision-making processes that affect people’s lives, such as credit scoring, job recruitment, and law enforcement. When AI systems are biased, they can make decisions that disproportionately affect marginalized communities.

Key Concerns:

- Discrimination: AI can perpetuate or even exacerbate racial, gender, and socioeconomic discrimination.

- Injustice: In criminal justice, biased AI models could lead to wrongful convictions or overly harsh sentencing for certain groups.

- Economic Inequality: AI used in hiring or lending could systematically exclude certain demographics, worsening economic inequality.

- Erosion of Trust: If people believe AI systems are unfair, they will lose trust in the technology, hindering its adoption.

Sources of Bias in AI

Understanding the root causes of AI bias is essential for developing strategies to overcome it. Several factors contribute to the development of biased AI systems.

Data-Related Biases

- Historical Bias: AI systems often rely on historical data that may contain entrenched societal biases. If historical data reflect discriminatory practices, AI systems will perpetuate those practices.

- Data Imbalance: When training data is unbalanced—such as having more data from one demographic group than another—the AI will be less effective in making decisions for underrepresented groups.

- Label Bias: During the labeling of training data, human biases can be introduced, affecting how AI interprets and classifies the data.
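
Data imbalance is the easiest of these to check before training. The sketch below is a minimal illustration, not a standard tool: the function names, the sample labels, and the 20% cutoff are all assumptions chosen for the example.

```python
from collections import Counter

def group_shares(groups):
    """Return each group's share of the dataset as a fraction of all rows."""
    counts = Counter(groups)
    total = sum(counts.values())
    return {g: n / total for g, n in counts.items()}

def underrepresented(groups, min_share=0.2):
    """List groups whose share falls below min_share (an illustrative cutoff)."""
    shares = group_shares(groups)
    return sorted(g for g, share in shares.items() if share < min_share)

# Hypothetical demographic labels for ten training rows.
sample_groups = ["A"] * 7 + ["B"] * 2 + ["C"] * 1

print(group_shares(sample_groups))
print(underrepresented(sample_groups))  # ['C']
```

A check like this only shows who is missing from the data. It says nothing about historical or label bias, which need a closer look at how the data was produced.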

Design-Related Biases

- Faulty Assumptions: AI systems may be built based on flawed assumptions or stereotypes, resulting in biased algorithms.

- Inadequate Testing: Insufficient testing, or test data that lacks diversity, can let biased behavior go unnoticed, because the AI is never evaluated on the varied situations it will meet in real use.

Deployment Bias

- Feedback Loops: Once deployed, AI systems may reinforce biases through feedback loops. For example, a biased recommendation algorithm may continue to show users the same types of content, reinforcing their preferences and limiting diversity.

- Socioeconomic Factors: AI systems can be deployed in ways that affect different groups disproportionately. For example, automated hiring systems may disproportionately filter out applicants from disadvantaged backgrounds.

Strategies to Overcome Bias in AI

Although bias in AI is a complex issue, several strategies can help reduce or eliminate it. Here are key approaches to overcoming bias in AI.

1. Diverse Data Collection

Ensuring diversity in the data used to train AI models is critical to reducing bias.

Action Steps

- Collect data that represents a wide range of demographics and viewpoints.

- Avoid relying solely on historical data, which may be biased.

- Use synthetic data or data augmentation techniques to fill gaps for underrepresented groups.
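
As a rough illustration of filling gaps for underrepresented groups, the sketch below uses random oversampling, which is a much simpler stand-in for synthetic data generation or augmentation. The function name, the `group` field, and the sample rows are hypothetical.

```python
import random
from collections import defaultdict

def oversample_to_balance(rows, group_key, seed=0):
    """Duplicate randomly chosen rows from smaller groups until every
    group has as many rows as the largest group."""
    rng = random.Random(seed)
    by_group = defaultdict(list)
    for row in rows:
        by_group[row[group_key]].append(row)

    target = max(len(members) for members in by_group.values())
    balanced = []
    for members in by_group.values():
        balanced.extend(members)
        # Sample with replacement to make up the shortfall (k may be 0).
        balanced.extend(rng.choices(members, k=target - len(members)))
    return balanced

# Hypothetical dataset: six rows from group A, two from group B.
rows = [{"group": "A", "x": i} for i in range(6)]
rows += [{"group": "B", "x": i} for i in range(2)]

balanced = oversample_to_balance(rows, "group")
print(len(balanced))  # 12
```

Oversampling only repeats existing rows, so it evens out group counts without adding new information. Collecting more representative data remains the stronger fix.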

2. Transparent AI Systems

Transparency in AI can help users understand how decisions are made, making it easier to detect and correct biases.

Action Steps

- Ensure that AI algorithms are explainable and interpretable by human users.

- Maintain detailed documentation on how AI systems are trained and deployed.

- Implement model auditing to review algorithm decisions regularly for fairness.

3. Bias Auditing Tools

Using tools specifically designed to detect bias can help mitigate unfair outcomes in AI systems.

Action Steps

- Use bias detection tools, such as IBM’s AI Fairness 360 or Google’s What-If Tool, during model development.

- Conduct regular audits of AI systems post-deployment to catch and correct emerging biases.
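
To give a sense of what such tools measure, here is a hand-rolled sketch of two common group fairness metrics. It does not use the API of AI Fairness 360 or the What-If Tool; the function names, group labels, and predictions are assumptions made for illustration.

```python
def selection_rate(predictions, groups, group):
    """Fraction of rows in `group` that received a positive prediction (1)."""
    picked = [p for p, g in zip(predictions, groups) if g == group]
    if not picked:
        raise ValueError(f"no rows for group {group!r}")
    return sum(picked) / len(picked)

def parity_difference(predictions, groups, unprivileged, privileged):
    """Selection rate of the unprivileged group minus the privileged group's."""
    return (selection_rate(predictions, groups, unprivileged)
            - selection_rate(predictions, groups, privileged))

def disparate_impact_ratio(predictions, groups, unprivileged, privileged):
    """Selection rate of the unprivileged group divided by the privileged group's."""
    privileged_rate = selection_rate(predictions, groups, privileged)
    if privileged_rate == 0:
        raise ValueError("privileged group has no positive predictions")
    return selection_rate(predictions, groups, unprivileged) / privileged_rate

# Hypothetical screening decisions: 1 = shortlisted, 0 = rejected.
preds = [1, 1, 1, 0, 1, 0, 0, 1, 0, 0]
groups = ["m"] * 5 + ["f"] * 5

print(disparate_impact_ratio(preds, groups, unprivileged="f", privileged="m"))
```

In this made-up sample, four of five "m" rows are shortlisted against one of five "f" rows, so the ratio comes out near 0.25. A commonly used rule of thumb treats ratios below 0.8 as a signal worth investigating, though the right threshold depends on context.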

4. Diverse Teams

Bias in AI can often stem from a lack of diversity among the people building the systems.

Action Steps

- Ensure that AI development teams are diverse in terms of race, gender, socioeconomic background, and experience.

- Include ethical considerations in AI development, encouraging team members to think critically about potential biases.

5. Accountability Frameworks

Establishing accountability in AI development ensures that individuals or organizations can be held responsible for biased outcomes.

Action Steps

- Develop clear guidelines and ethical standards for AI development.

- Assign accountability to individuals or teams to monitor AI outcomes for fairness and equity.

- Create a system for redress where biased decisions can be challenged and corrected.

6. Regular Model Retraining

AI models can drift over time as new data is introduced, which can lead to the re-emergence of bias.

Action Steps

- Regularly retrain AI models on fresh, balanced data.

- Continuously monitor performance across different demographic groups to ensure fairness.
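
Monitoring across groups can start with something as simple as per-group accuracy. The sketch below assumes labels, predictions, and group membership are available as parallel lists; the names and data are hypothetical.

```python
from collections import defaultdict

def accuracy_by_group(y_true, y_pred, groups):
    """Return the share of correct predictions for each group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}

def accuracy_gap(y_true, y_pred, groups):
    """Difference between the best and worst per-group accuracy."""
    scores = accuracy_by_group(y_true, y_pred, groups).values()
    return max(scores) - min(scores)

# Hypothetical labels and predictions for two groups of four rows each.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["A"] * 4 + ["B"] * 4

print(accuracy_by_group(y_true, y_pred, groups))  # {'A': 1.0, 'B': 0.5}
print(accuracy_gap(y_true, y_pred, groups))       # 0.5
```

Tracking the gap after each retraining run is one way to notice when a model that looks fine on average has started to fail a particular group.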

Challenges in Ensuring Fairness in AI

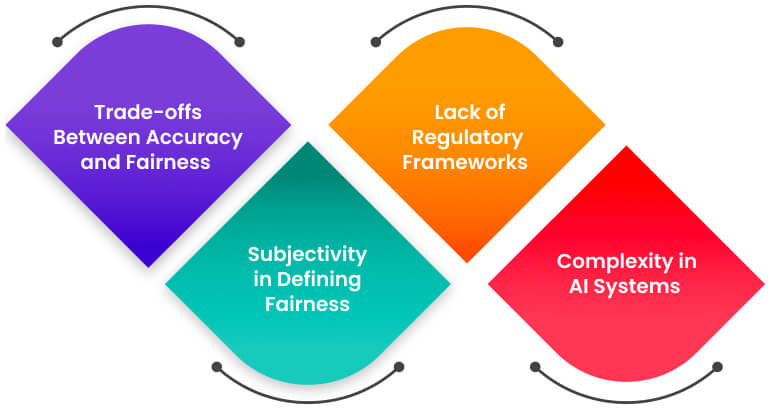

While many strategies exist to reduce bias in AI, achieving complete fairness is difficult. Here are some challenges that make this process complex.

1. Trade-offs Between Accuracy and Fairness

In some cases, optimizing for fairness might reduce the overall accuracy of an AI model. AI developers must often decide how much fairness they are willing to sacrifice to maintain high performance.

2. Subjectivity in Defining Fairness

Fairness itself is a subjective concept. What one person considers fair may not be seen the same way by others. This subjectivity makes it hard to create universally fair AI systems.
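
The disagreement is not only philosophical. Two widely used formal definitions can give opposite verdicts on the same predictions. The sketch below uses made-up data in which both groups are selected at the same rate (often called demographic parity), yet qualified members of one group are selected only half as often (a violation of what is often called equal opportunity). All names and values are illustrative assumptions.

```python
def positive_rate(y_pred):
    """Share of rows that received a positive prediction."""
    return sum(y_pred) / len(y_pred)

def true_positive_rate(y_true, y_pred):
    """Share of truly positive rows that received a positive prediction."""
    hits = [pred for truth, pred in zip(y_true, y_pred) if truth == 1]
    return sum(hits) / len(hits)

# Hypothetical group A: two qualified people, both selected.
a_true, a_pred = [1, 1, 0, 0], [1, 1, 0, 0]
# Hypothetical group B: four qualified people, only two selected.
b_true, b_pred = [1, 1, 1, 1], [1, 1, 0, 0]

# Same selection rate in both groups, so demographic parity holds.
print(positive_rate(a_pred), positive_rate(b_pred))  # 0.5 0.5

# Different true positive rates, so equal opportunity does not hold.
print(true_positive_rate(a_true, a_pred), true_positive_rate(b_true, b_pred))  # 1.0 0.5
```

Which definition should win depends on the application, which is why fairness criteria need to be chosen deliberately rather than assumed.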

3. Lack of Regulatory Frameworks

As AI technology evolves rapidly, governments and regulatory bodies are struggling to keep up. The absence of comprehensive regulations can make it difficult to hold developers accountable for biased outcomes.

4. Complexity in AI Systems

AI systems, especially deep learning models, are highly complex and difficult to interpret. This “black box” nature makes it challenging to identify the root cause of bias and take corrective measures.

Ethical Considerations

Confronting prejudice within artificial intelligence transcends mere technical challenges and becomes a moral obligation. The integration of AI into the process of decision-making should be in harmony with the fundamental principles of society, which include fairness, egalitarianism, and the upholding of human dignity.

It is essential to hire AI developers from an experienced IT agency whose tools reflect the ethical standards and values that we hold dear as a civilization. This commitment to ethical AI will help foster trust and acceptance among the general public, ensuring that the benefits of AI are shared equitably and justly while protecting the rights and freedoms that are the cornerstone of our society.

- AI Ethics Guidelines: Many organizations have developed ethical frameworks for AI development, such as the European Union’s Ethics Guidelines for Trustworthy AI and Google’s AI Principles. These guidelines emphasize fairness, accountability, transparency, and privacy in AI systems.

- Inclusive AI Development: Ethical AI development must include voices from various communities, especially those who are most affected by AI decisions. This ensures that AI systems do not disproportionately harm marginalized groups.

The Future of Fair AI

The future of AI depends on addressing bias and ensuring that AI systems promote fairness and equity. Here’s what we can expect going forward:

- Improved AI Ethics Training: As awareness of AI bias grows, more AI developers will receive formal training in ethics, ensuring that fairness is embedded in AI from the start.

- Stronger Regulations: Governments will increasingly impose regulations on AI development to ensure fairness, transparency, and accountability.

- Bias-Resistant Algorithms: Researchers are developing new algorithms that are inherently resistant to bias, reducing the reliance on post-hoc bias detection and correction.

Conclusion

Mitigating bias in artificial intelligence is paramount to guarantee that AI advancements are equitable for all. The journey towards equitable AI involves hurdles, yet the adoption of varied data sets, transparent methodologies, vigilant bias monitoring mechanisms, and adherence to ethical standards can markedly diminish instances of bias.

With AI increasingly influencing decision-making, it’s imperative that these systems embody fairness, justice, and impartiality. Proactive efforts in this direction promise a future where AI acts as a catalyst for positive societal change. WeblineIndia’s team excels in the creation of unbiased AI solutions. With a rich background and a team of specialists committed to designing AI that is both fair and accurate, we ensure outcomes that are just and reliable.
